Tech giant Meta is testing a new blur feature to protect Instagram users from harmful messages sent through direct messages (DMs).

The company says Instagram users are often bombarded with unwanted messages. Users wish many of these had never arrived or, if they did, had come with a warning attached.

According to the company, Instagram will use machine learning to tackle this problem. The feature has not yet been rolled out and is currently being tested.

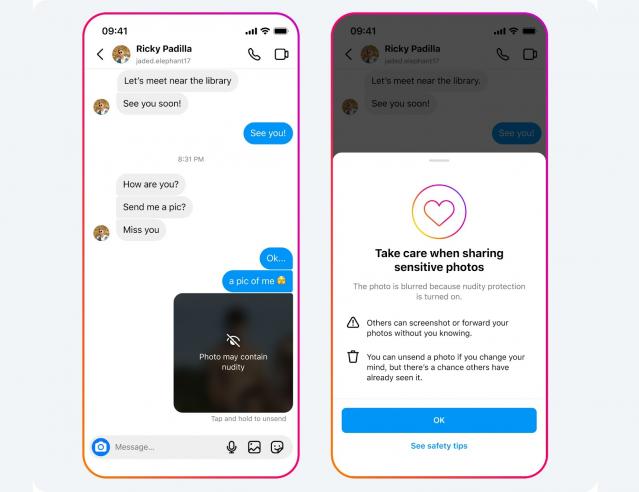

The new AI-powered feature will detect images containing nudity that are sent through Instagram's DM system. These images will then be blurred and labeled with a warning that they contain nudity or may be explicit, according to a public post the company published on Thursday.

Once the feature rolls out, Instagram users will also see these alerts when they send messages containing such images.

The goal is to warn senders in advance that unsending a picture does not guarantee the recipient has not already seen it. The image could also be screenshotted and forwarded to others without the sender's knowledge.

Users who try to forward or share a flagged picture will see a pop-up asking them to act responsibly and respectfully before passing potentially sensitive content on to others. They will still be able to share the picture afterward.

When users receive an image that the app detects as explicit, the app will blur it and show a short message noting that there is no pressure to respond. The alert will also remind recipients that they can block the sender. Meta says it wants people to know they should never feel pressured into doing anything against their will or anything that makes them uncomfortable.

Meta is testing these features because it believes blurring images and alerting users is an important safeguard for people under 18. The company also says it wants to combat problems it has been dealing with for some time, including financial sextortion. The FBI describes this as a crime in which criminals manipulate victims into sharing explicit images and then threaten to release that material unless the victims pay a ransom.

The company says it has worked hard to find a solution to this persistent problem. Because scammers use these tactics to find and extort so many victims online, effective ways to stop them are needed, the post added.

Once they go live, the new features will be enabled by default for minors, while adults will need to switch them on themselves, the company added.

According to the post, Facebook's parent company says the tool for detecting explicit images in DMs will run on users' devices, so it also works in end-to-end (E2E) encrypted chats. As a result, Meta will not be able to see flagged images unless someone reports them.

Instagram already has several privacy features that make it harder for threat actors and scammers to reach users. The app already blurs such images when they arrive in a user's message requests folder and can automatically hide certain requests, so content deemed questionable is kept in a separate folder. Meta also pointed to the separate supervision tools available for teen accounts through the company's Family Center.

Overall, the effort to blur unwanted pictures or selfies sent to inboxes looks like a welcome step. Parents who cannot always see what their kids are doing at school may feel more reassured by these features when they are not around to supervise.
