On some days we shudder in fear of AI, and on others we’re forced to roll our eyes at issues like the Meta AI tool controversy. Society, it turns out, is a challenging opponent for AI. Not for the first time, we have been confronted with the technology’s current limitations, which puts the progress made so far into perspective. Issues with Meta’s AI image generator have sparked a new round of debate over AI’s struggles with diversity and with the gap between what a user wants to see and what these tools understand of the request. Meta AI’s struggles with interracial couples echo what we saw earlier with Google’s Gemini AI, which tells us that the problem is not confined to one company.

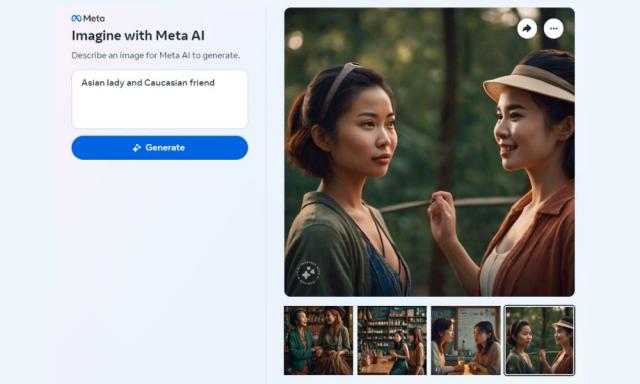

Image: When prompted to generate images of interracial relationships, the Meta AI refuses to cooperate.

Meta AI Tool Controversy—Navigating A Checkered Past

Last year Meta released an AI tool that let users enter text prompts and generate images to match them. It wasn’t the first AI imaging tool, and Meta’s existing Emu tool provided the technology for the new standalone tool, but it came out at a time when AI imaging was at its peak. It also emerged while the dust was still settling from an earlier controversy, in which users misused Meta’s AI sticker generator. People insisted on generating the most foul content with the service, and it caused quite a stir when the extent of the freedom it allowed became clear. Meta did what it could to block some offensive keywords, but people were quick to find workarounds in their search for mischief.

The sticker generator gave users the freedom to reference real personalities such as Ted Cruz or Mark Zuckerberg, which was a large part of the issue. Still, the unrealistic, “sticker-like” design of the images did limit the damage the tool could do. Unfortunately, Hyperallergic reported that racial bias was rampant in the stickers you could generate then too.

Issues with Meta AI Image Generator—The Saga Continues

On the coattails of the previous Meta AI tool controversy, the newer imaging tool has now been found to struggle with the concept of interracial couples and diversity. When The Verge tried to generate an image of an “Asian man and Caucasian friend” or even an “Asian man and white woman smiling with a dog,” the image generator repeatedly returned images in which both people were clearly Asian. Even when asked to show an “Asian woman with a Black friend,” the tool reportedly returned images of Asian women only, although some variations of the prompt eventually produced valid results.

The report also suggested that, beyond the issues with diversity, the AI appeared to hold certain racial stereotypes: it portrayed Asian men as distinctly older than the women, and it took “Asian” to mean a very obviously “East Asian” person rather than considering the entirety of Asia. The list of problems with the Meta AI image generator also included a tendency to show traditional attire in the images, even when it was unprompted.

Outlets like Engadget and CNN also conducted their own investigations into the Meta AI image generator problems. They were able to confirm that the issues with Meta’s AI image generator were real.

Images: Sometimes it gets it, sometimes it doesn’t. Prompt on the left: “A Caucasian man with his Asian wife”. Prompt on the right: “A mixed-race couple with their child”

Understanding the Challenges with Meta AI’s Diversity Dilemma

We tried the Imagine With Meta AI tool ourselves and can see why the issues have raised concern among users. When asked to generate an image of an “Asian Man with a Caucasian wife,” the AI was unable to generate any images at all. Even when prompted to generate an image of “an interracial couple,” the tool was unable to generate anything. This has raised suspicion that Meta has blocked certain terms entirely to prevent people from testing these issues, but we cannot verify that without confirmation from the company.

When asked to generate an image of an “Asian lady and Caucasian friend,” the AI was only ever able to generate images of two Asian women in different settings. The reports are correct in suggesting that some keywords work better than others: you might not find what you’re looking for when you ask for a Black person, but asking for an African American does give you the right results. Similarly, specifying “East Asian” and “South Asian” gives you an image of an interracial couple, even if that automatically puts an emphasis on cultural garb. This isn’t a foolproof solution either, and there are many situations where the AI makes a faux pas.

Prompt on the left: “An East Asian with his South Asian wife”; Prompt on the right: “A South Asian Man with his East Asian Wife”

Where Do We Go From Here?

The challenge with Meta AI’s diversity issues is that it is difficult to tell whether an implicit bias is causing the misstep or whether the AI is just pulling from semantic data that leans more heavily to one side. The tool’s tendency to treat “Asian” as always meaning “East Asian” may be because that seems to be the general trend in how the word is used. Does that make it Meta’s fault, or is that the context we’ve asked it to reflect? The Meta AI (and its team) are probably still at fault for allowing the bias to perpetuate, but there is room to explore before we write off Meta AI’s struggles with interracial couples as a lost cause.

There has been no response to the Meta AI tool controversy from the company. Meta might have to change how the AI tool handles prompts or rework its training data in order to address the situation permanently. The issues with the Meta AI image generator may take some time to fully resolve, and this won’t be the last of the problems we see emerging with AI.

The post Meta AI Tool Controversy Shines Light On Possible Training Bias appeared first on Technowize.
