Data Fusion is a process that integrates and combines data from multiple sources to produce more comprehensive, accurate, and actionable information than can be obtained from individual data sources alone. It involves merging data from diverse sensors, platforms, and sources to create a unified representation of the underlying phenomenon or environment. Data fusion techniques leverage statistical methods, machine learning algorithms, and domain-specific knowledge to extract meaningful insights, patterns, and relationships from disparate data sources, enabling better decision-making, situational awareness, and intelligence analysis.

Key Components

- Data Sources: Data fusion involves integrating data from various sources, including sensors, databases, IoT devices, social media, and external data feeds. These sources may provide different types of data, such as structured and unstructured data, text, images, videos, and geospatial data.

- Integration Techniques: Data fusion techniques encompass a range of methods for integrating and combining data, including sensor fusion, feature fusion, decision fusion, and model fusion. These techniques may involve data preprocessing, alignment, normalization, fusion rules, aggregation, and inference to merge heterogeneous data sources effectively.

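- Information Fusion Levels: Data fusion can occur at different levels of abstraction. Raw data fusion combines the measurements themselves. Feature-level fusion combines attributes extracted from each source. Decision-level fusion combines the outputs (labels, detections, or scores) that each source or model has already produced. Semantic-level fusion combines interpretations expressed in a shared vocabulary or ontology. Higher levels discard more source detail but make it easier to combine very different kinds of data.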
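
As a rough illustration of two of these levels, the sketch below assumes two hypothetical sources, a camera and a radar, in a toy Python setting. Feature-level fusion concatenates the per-source feature vectors before any model sees them. Decision-level fusion lets each source classify on its own and then combines the labels by majority vote. Majority voting is only one of many decision-fusion rules and was chosen here for brevity. The function names are illustrative.

```python
from collections import Counter
from typing import List, Sequence


def feature_level_fusion(*feature_vectors: Sequence[float]) -> List[float]:
    """Feature-level fusion: concatenate per-source feature vectors into one
    joint vector that a single downstream model can consume."""
    fused: List[float] = []
    for vec in feature_vectors:
        fused.extend(vec)
    return fused


def decision_level_fusion(labels: Sequence[str]) -> str:
    """Decision-level fusion: each source has already produced its own label;
    combine them by majority vote. Ties go to the label seen first, because
    Counter.most_common orders equal counts by first occurrence."""
    if not labels:
        raise ValueError("need at least one decision to fuse")
    return Counter(labels).most_common(1)[0][0]


# Hypothetical per-source outputs for one observed object
camera_features = [0.8, 0.1]   # e.g. two image-derived scores
radar_features = [12.5]        # e.g. measured range in metres
print(feature_level_fusion(camera_features, radar_features))
print(decision_level_fusion(["vehicle", "pedestrian", "vehicle"]))
```

Here `feature_level_fusion([0.8, 0.1], [12.5])` returns `[0.8, 0.1, 12.5]`, and the vote over `["vehicle", "pedestrian", "vehicle"]` returns `"vehicle"`.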

Methodologies and Approaches

Data fusion can be implemented with several families of methods. The right choice depends on the application's data, goals, and domain.

Statistical Fusion Techniques

Statistical fusion techniques use probabilistic models, Bayesian inference, and statistical estimation methods to combine data from multiple sources and estimate the underlying parameters, distributions, and uncertainties. These techniques are commonly used in sensor fusion, target tracking, and statistical pattern recognition applications.
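
The following is a minimal sketch of inverse-variance weighting, one of the simplest statistical fusion rules. It assumes that each source reports an independent, unbiased estimate of the same scalar quantity along with a known variance. The function name and the two-sensor example are illustrative and do not come from a specific library.

```python
from typing import Sequence, Tuple


def fuse_gaussian_estimates(
    means: Sequence[float], variances: Sequence[float]
) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of independent, unbiased estimates.

    Each estimate is weighted by its precision (1 / variance). For Gaussian
    measurement errors and a flat prior this equals the Bayesian posterior
    mean and variance of the fused quantity.
    """
    if not means or len(means) != len(variances):
        raise ValueError("means and variances must be non-empty and equal length")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    precisions = [1.0 / v for v in variances]
    total_precision = sum(precisions)
    fused_mean = sum(p * m for p, m in zip(precisions, means)) / total_precision
    fused_variance = 1.0 / total_precision  # never larger than the smallest input variance
    return fused_mean, fused_variance


# Two hypothetical range sensors observing the same target (metres, metres^2)
mean, var = fuse_gaussian_estimates([10.0, 12.0], [4.0, 1.0])
print(f"fused range = {mean:.2f} m, variance = {var:.2f}")
```

Fusing a range of 10.0 m (variance 4.0) with a range of 12.0 m (variance 1.0) gives a mean of about 11.6 m and a variance of 0.8, which is lower than either input's variance. In target tracking, a Kalman filter applies essentially this update recursively and adds a motion model between measurements.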

Machine Learning Fusion Models

Machine learning fusion models leverage supervised, unsupervised, and reinforcement learning algorithms to learn patterns, correlations, and relationships from integrated data and make predictions or decisions based on the learned models. These models include ensemble methods, neural networks, deep learning architectures, and hybrid approaches that combine multiple learning algorithms.
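
Soft voting is one common ensemble fusion rule. Each trained model outputs a probability for every class, and the fused prediction averages those vectors. Models can optionally be weighted by how much they are trusted. The sketch below uses plain Python with hypothetical inputs. Production versions of this idea exist, for example scikit-learn's `VotingClassifier` with `voting='soft'`.

```python
from typing import List, Optional, Sequence


def soft_vote(
    model_probs: Sequence[Sequence[float]],
    weights: Optional[Sequence[float]] = None,
) -> List[float]:
    """Weighted average of per-model class-probability vectors."""
    if not model_probs:
        raise ValueError("need at least one model's probabilities")
    n_classes = len(model_probs[0])
    if any(len(p) != n_classes for p in model_probs):
        raise ValueError("all models must score the same classes")
    if weights is None:
        weights = [1.0] * len(model_probs)  # equal trust in every model
    if len(weights) != len(model_probs):
        raise ValueError("one weight per model is required")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return [
        sum(w * probs[c] for w, probs in zip(weights, model_probs)) / total
        for c in range(n_classes)
    ]


def predict(fused: Sequence[float]) -> int:
    """Index of the most probable class after fusion."""
    return max(range(len(fused)), key=lambda c: fused[c])


# Hypothetical outputs of three models over the classes [normal, anomaly]
probs = [[0.9, 0.1], [0.4, 0.6], [0.3, 0.7]]
fused = soft_vote(probs)
print([round(p, 3) for p in fused], predict(fused))
```

With these three vectors, `soft_vote` yields roughly `[0.533, 0.467]` and `predict` returns `0` (normal). A hard majority vote would pick anomaly instead, because two of the three models lean that way. Soft voting lets the first model's high confidence outweigh two weaker votes, which is the main reason to prefer it when the models' probabilities are reasonably calibrated.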

Semantic Fusion Frameworks

Semantic fusion frameworks utilize domain-specific knowledge, ontologies, and semantic models to represent and integrate heterogeneous data from disparate sources based on their semantic meaning, context, and relationships. Semantic fusion enables semantic interoperability, knowledge discovery, and inference in complex and dynamic environments.
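
Full semantic fusion frameworks usually rely on formal ontologies, such as RDF/OWL vocabularies. The sketch below substitutes a plain dictionary for the ontology so that it can focus on the core step: map each source's local field names onto shared concepts, normalize units, and only then merge the records. The source names (`ehr`, `wearable`), the field names, and the concept names are all hypothetical.

```python
from typing import Any, Dict, Iterable, List, Mapping, Tuple

LB_TO_KG = 0.45359237  # exact definition of the international avoirdupois pound

# Hypothetical stand-in for an ontology: each source's local field names
# mapped to shared concept names.
CONCEPT_MAP: Dict[str, Dict[str, str]] = {
    "ehr": {"pt_id": "patient_id", "weight_kg": "body_weight_kg", "hr_bpm": "heart_rate_bpm"},
    "wearable": {"user": "patient_id", "weight_lb": "body_weight_lb", "heartRate": "heart_rate_bpm"},
}


def to_shared_concepts(source: str, record: Mapping[str, Any]) -> Dict[str, Any]:
    """Rename a record's fields to shared concepts and normalize units."""
    mapping = CONCEPT_MAP[source]
    shared: Dict[str, Any] = {}
    for field, value in record.items():
        concept = mapping.get(field)
        if concept is None:
            continue  # no agreed meaning for this field; skip rather than guess
        if concept == "body_weight_lb":
            concept, value = "body_weight_kg", value * LB_TO_KG  # unit alignment
        shared[concept] = value
    return shared


def fuse_records(
    records: Iterable[Tuple[str, Mapping[str, Any]]]
) -> Dict[Any, Dict[str, List[Tuple[str, Any]]]]:
    """Group values by patient and concept, keeping each value's source so
    later steps can reconcile conflicts (e.g. two different weights)."""
    fused: Dict[Any, Dict[str, List[Tuple[str, Any]]]] = {}
    for source, record in records:
        shared = to_shared_concepts(source, record)
        if "patient_id" not in shared:
            raise ValueError(f"{source} record has no patient identifier")
        bucket = fused.setdefault(shared.pop("patient_id"), {})
        for concept, value in shared.items():
            bucket.setdefault(concept, []).append((source, value))
    return fused


merged = fuse_records([
    ("ehr", {"pt_id": "p1", "weight_kg": 70.0, "ward": "B"}),
    ("wearable", {"user": "p1", "weight_lb": 154.0, "heartRate": 72}),
])
print(merged)
```

In this sketch, the unmapped `ward` field is dropped rather than guessed at. Both weights land under `body_weight_kg` in the same unit and still carry their sources, which leaves the choice of conflict-resolution rule to a later fusion step.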

Benefits of Data Fusion

Data fusion offers several benefits for organizations and applications involved in integrating and analyzing heterogeneous data sources:

- Improved Situational Awareness: Data fusion provides a comprehensive and coherent representation of the underlying environment, enabling better situational awareness, decision-making, and response coordination in mission-critical applications such as defense, security, and emergency management.

- Enhanced Accuracy and Reliability: By combining data from multiple sources, data fusion can improve the accuracy, reliability, and completeness of information compared to any single source. Fusion techniques can mitigate random errors and uncertainties. Where sources err independently, fusion can also offset biases specific to any one source, leading to more robust and trustworthy insights.

- Multimodal Analysis: Data fusion enables multimodal analysis of heterogeneous data types, including text, images, videos, and sensor data. By integrating different modalities, data fusion facilitates holistic analysis, pattern recognition, and knowledge discovery across diverse data sources, leading to deeper insights and understanding.

- Efficient Resource Utilization: Data fusion optimizes resource utilization by leveraging complementary information from diverse sources to achieve common objectives more efficiently. By combining data streams, fusion techniques can remove duplicated records and measurements and make fuller use of the data already collected. This matters most in resource-constrained environments.
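
The accuracy benefit can be made precise in the simplest statistical case. Assume $n$ independent, unbiased estimates $x_1, \dots, x_n$ of the same quantity, with known variances $\sigma_1^2, \dots, \sigma_n^2$. Inverse-variance weighting then gives:

$$
\hat{x} = \frac{\sum_{i=1}^{n} x_i / \sigma_i^2}{\sum_{i=1}^{n} 1 / \sigma_i^2},
\qquad
\frac{1}{\sigma_{\hat{x}}^2} = \sum_{i=1}^{n} \frac{1}{\sigma_i^2} \ge \frac{1}{\min_i \sigma_i^2}
\quad\Longrightarrow\quad
\sigma_{\hat{x}}^2 \le \min_i \sigma_i^2 .
$$

For example, fusing estimates with variances 4 and 1 gives $1/\sigma_{\hat{x}}^2 = 0.25 + 1 = 1.25$, so $\sigma_{\hat{x}}^2 = 0.8$. This is below the better sensor's variance of 1. The guarantee covers random error only. Because the weights sum to one, a bias shared by every source passes into $\hat{x}$ unchanged. Positively correlated errors also reduce the variance less than this formula suggests.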

Challenges in Implementing Data Fusion

Implementing data fusion raises several challenges:

- Heterogeneity and Incompatibility: Data fusion must deal with heterogeneity and incompatibility issues arising from differences in data formats, semantics, resolutions, and quality across diverse sources. Integrating heterogeneous data requires data preprocessing, normalization, and alignment to ensure compatibility and consistency.

- Uncertainty and Ambiguity: Data fusion must account for uncertainty, ambiguity, and incompleteness in the integrated data, which may arise from measurement errors, sensor noise, missing data, or conflicting information. Handling uncertainty requires probabilistic modeling, uncertainty quantification, and robust fusion algorithms.

- Scalability and Complexity: Data fusion becomes increasingly challenging as the volume, velocity, and variety of data sources grow, leading to scalability and complexity issues in fusion algorithms, computational resources, and data management. Scalable fusion techniques and distributed processing frameworks are needed to handle large-scale data fusion tasks efficiently.
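
Alignment, listed under Heterogeneity and Incompatibility, often starts with time, because two sensors rarely sample at the same instants. The minimal sketch below resamples one stream onto the other's timestamps by linear interpolation. It assumes that both streams share a clock and that the source timestamps are sorted in increasing order. The function name is hypothetical, and linear interpolation itself assumes the signal varies smoothly between samples.

```python
from bisect import bisect_left
from typing import List, Optional, Sequence


def align_to_timestamps(
    src_times: Sequence[float],
    src_values: Sequence[float],
    target_times: Sequence[float],
) -> List[Optional[float]]:
    """Resample a source stream onto target timestamps by linear interpolation.

    Assumes src_times is sorted in increasing order and shares a clock with
    target_times. Targets outside the source's time range get None instead
    of an extrapolated value, so gaps in coverage stay visible.
    """
    if len(src_times) != len(src_values) or len(src_times) < 2:
        raise ValueError("need at least two matching (time, value) samples")
    aligned: List[Optional[float]] = []
    for t in target_times:
        if t < src_times[0] or t > src_times[-1]:
            aligned.append(None)
            continue
        i = bisect_left(src_times, t)  # first index with src_times[i] >= t
        if src_times[i] == t:
            aligned.append(src_values[i])  # exact match, no interpolation needed
            continue
        t0, t1 = src_times[i - 1], src_times[i]
        v0, v1 = src_values[i - 1], src_values[i]
        aligned.append(v0 + (v1 - v0) * (t - t0) / (t1 - t0))
    return aligned


# Hypothetical 1 Hz position stream resampled onto camera frame times
print(align_to_timestamps([0.0, 1.0, 2.0], [0.0, 10.0, 20.0], [0.5, 1.0, 2.5]))
```

This call returns `[5.0, 10.0, None]`. Returning `None` outside the source's time range, rather than extrapolating, keeps missing coverage visible to later fusion steps.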

Strategies for Implementing Data Fusion

To address challenges and maximize the benefits of data fusion, organizations can implement various strategies:

- Data Quality Assurance: Ensure data quality, integrity, and consistency across heterogeneous data sources through data cleansing, validation, and quality assurance processes. Establish data governance policies, standards, and procedures to maintain data quality throughout the fusion lifecycle.

- Interoperability and Standards: Promote interoperability and compatibility among data sources by adopting standardized data formats, protocols, and metadata schemas. Align data fusion efforts with industry standards, interoperability frameworks, and best practices to facilitate seamless integration and exchange of data.

- Modeling and Uncertainty Management: Develop robust fusion models and algorithms that account for uncertainty, variability, and ambiguity in the integrated data. Use probabilistic methods, Bayesian inference, and uncertainty propagation techniques to quantify and propagate uncertainty through the fusion process.

- Domain Knowledge Integration: Incorporate domain-specific knowledge, expertise, and contextual information into the fusion process to enhance the relevance, accuracy, and usefulness of fused data. Collaborate with domain experts, subject matter specialists, and end users to capture domain knowledge and refine fusion models accordingly.
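
The Data Quality Assurance and Modeling and Uncertainty Management strategies can be combined in a small pipeline. First, reject readings that are missing or physically implausible. Then fuse the remaining readings with an estimator that tolerates an outlier. The sketch below uses a range check followed by the median. The station names and the valid range are hypothetical. The median is just one robust choice, and trimmed means or outlier-rejecting filters are common alternatives.

```python
from statistics import median
from typing import Dict, List, Mapping, Optional, Tuple

# Hypothetical plausibility limits; in practice they would come from sensor
# specifications or the organization's data governance rules.
VALID_RANGES: Dict[str, Tuple[float, float]] = {
    "air_temperature_c": (-60.0, 60.0),
}


def validate(quantity: str, readings: Mapping[str, Optional[float]]) -> Dict[str, float]:
    """Keep only readings that are present and inside the plausible range."""
    low, high = VALID_RANGES[quantity]
    return {
        source: value
        for source, value in readings.items()
        if value is not None and low <= value <= high
    }


def robust_fuse(
    quantity: str, readings: Mapping[str, Optional[float]]
) -> Tuple[Optional[float], List[str]]:
    """Validate, then fuse with the median, which is far less sensitive than
    the mean to an outlier that passes the range check, provided most sources
    are healthy. Also returns rejected sources so they can be audited."""
    valid = validate(quantity, readings)
    rejected = sorted(set(readings) - set(valid))
    if not valid:
        return None, rejected
    return median(list(valid.values())), rejected


print(robust_fuse("air_temperature_c", {
    "station_a": 21.4, "station_b": 22.0, "station_c": 85.0,
    "station_d": None, "station_e": 35.0,
}))
```

This call returns `(22.0, ['station_c', 'station_d'])`. station_c fails the range check and station_d is missing. The median then keeps station_e's suspicious 35.0 from pulling the result upward, as a mean would have done.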

Real-World Examples

Data fusion is applied in various domains and applications to integrate and analyze heterogeneous data sources:

- Surveillance and Security: Data fusion is used in surveillance systems to integrate data from video cameras, radar, acoustic sensors, and social media feeds to detect and track security threats, monitor public safety, and coordinate emergency response activities.

- Healthcare and Biomedicine: Data fusion is applied in healthcare to integrate patient data from electronic health records, medical imaging, wearable sensors, and genomic databases to support clinical decision-making, disease diagnosis, and personalized treatment planning.

- Smart Cities and Urban Planning: Data fusion is employed in smart city initiatives to integrate data from IoT sensors, transportation networks, environmental sensors, and social media platforms to optimize urban planning, improve traffic management, and enhance public services.

Conclusion

Data fusion turns data from many sources into information that is more complete, accurate, and actionable than any single source provides. By leveraging statistical methods, machine learning algorithms, and domain-specific knowledge, data fusion enables organizations to extract meaningful insights, patterns, and relationships from heterogeneous data sources, leading to better decision-making, situational awareness, and intelligence analysis. Data fusion still faces challenges such as heterogeneity and uncertainty. Organizations can address them with the strategies and best practices described above, and so gain improved situational awareness, enhanced accuracy, and efficient resource utilization across diverse domains and applications.

Other examples of merging engineering with internal operational departments

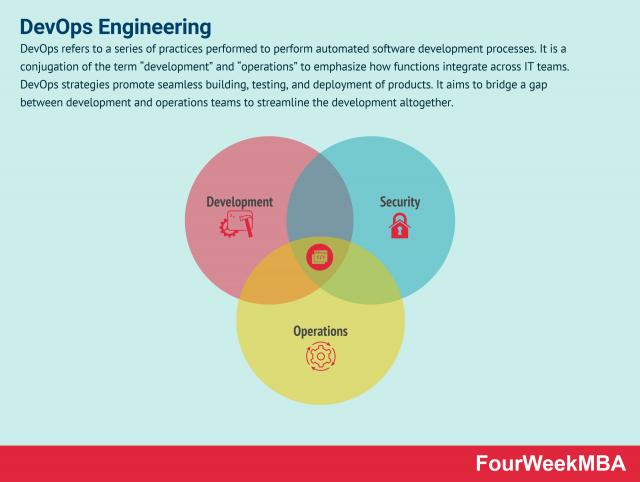

DevOps Engineering

DevSecOps

FullStack Development

MLOps

RevOps

AdOps


The post Data Fusion appeared first on FourWeekMBA.