Introduction

If you have followed the generative AI era (since the release of ChatGPT, to be more precise), you have most likely at least heard the term “vector databases.”

It’s okay if you don’t know what they are, as this article is primarily intended to explain vector databases in detail.

But given how popular they have become lately, it is worth understanding what makes them so powerful and how useful they are in practice, not just for LLMs but for other applications as well.

Let’s dive in!

What are vector databases?

Objective

To begin, we must note that vector databases are NOT new.

In fact, they have existed for a pretty long time. You have been indirectly interacting with them daily, even before they became widely popular, through applications like recommendation systems and search engines.

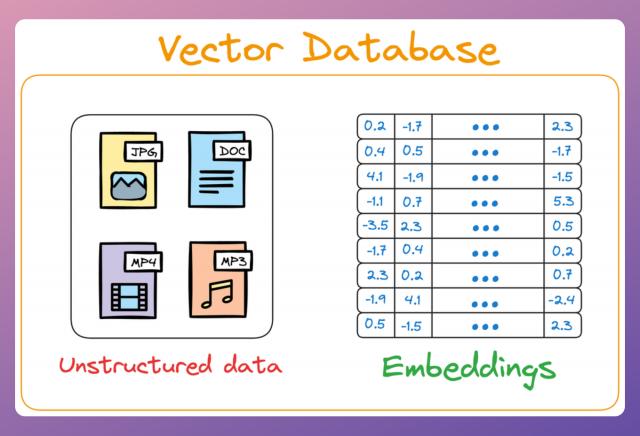

Simply put, a vector database stores unstructured data (text, images, audio, video, etc.) in the form of vector embeddings.

Each data point, whether a word, a document, an image, or any other entity, is transformed into a numerical vector using ML techniques (which we shall see ahead).

This numerical vector is called an embedding, and the model is trained in such a way that these vectors capture the essential features and characteristics of the underlying data.

Considering word embeddings, for instance, we may discover that in the embedding space, the embeddings of fruits are found close to each other, while cities form another cluster, and so on.

This shows that embeddings can learn the semantic characteristics of entities they represent (provided they are trained appropriately).

Once the embeddings are stored in a vector database, we can retrieve the original objects that are similar to the query we wish to run on our unstructured data.

In other words, encoding unstructured data allows us to run many sophisticated operations like similarity search, clustering, and classification over it, which otherwise is difficult with traditional databases.

To exemplify, when an e-commerce website provides recommendations for similar items or searches for a product based on the input query, we’re (in most cases) interacting with vector databases behind the scenes.

Before we get into the technical details, let me give you a couple of intuitive examples to understand vector databases and their immense utility.

Example #1

Let's imagine we have a collection of photographs from various vacations we’ve taken over the years. Each photo captures different scenes, such as beaches, mountains, cities, and forests.

Now, we want to organize these photos in a way that makes it easier to find similar ones quickly.

Traditionally, we might organize them by the date they were taken or the location where they were shot.

However, we can take a more sophisticated approach by encoding them as vectors.

More specifically, instead of relying solely on dates or locations, we could represent each photo as a numerical vector that captures the essence of the image.

Let’s say we use an algorithm that converts each photo into a vector based on its color composition, prominent shapes, textures, people, etc.

Each photo is now represented as a point in a multi-dimensional space, where the dimensions correspond to different visual features and elements in the image.

Now, when we want to find similar photos, say, based on our input text query, we encode the text query into a vector and compare it with image vectors.

Photos that match the query are expected to have vectors that are close together in this multi-dimensional space.

Suppose we wish to find images of mountains.

In that case, we can quickly find such photos by querying the vector database for images close to the vector representing the input query.

A point to note here is that a vector database is NOT just a database to keep track of embeddings.

Instead, it maintains both the embeddings and the raw data which generated those embeddings.

Why is that necessary, you may wonder?

Considering the above image retrieval task again, if our vector database is only composed of vectors, we would also need a way to reconstruct the image because that is what the end-user needs.

When a user queries for images of mountains, they would receive a list of vectors representing similar images, but without the actual images.

By storing both the embeddings (the vectors representing the images) and the raw image data, the vector database ensures that when a user queries for similar images, it not only returns the closest matching vectors but also provides access to the original images.

Example #2

In this example, consider an all-text unstructured dataset, say thousands of news articles, from which we wish to find an answer to a question.

Traditional search methods rely on exact keyword search, which is entirely a brute-force approach and does not consider the inherent complexity of text data.

In other words, languages are incredibly nuanced, and each language provides various ways to express the same idea or ask the same question.

For instance, a simple inquiry like "What's the weather like today?" can be phrased in numerous ways, such as "How's the weather today?", "Is it sunny outside?", or "What are the current weather conditions?".

This linguistic diversity makes traditional keyword-based search methods inadequate.

As you may have already guessed, representing this data as vectors can be pretty helpful in this situation too.

Instead of relying solely on keywords and following a brute-force search, we can first represent text data in a high-dimensional vector space and store them in a vector database.

When users pose queries, the vector database can compare the vector representation of the query with that of the text data, even if they don't share the exact same wording.

How to generate embeddings?

At this point, if you are wondering how we even transform words (strings) into vectors (lists of numbers), let me explain.

We also covered this in a recent newsletter issue here but not in much detail, so let’s discuss those details here.

If you already know what embedding models are, feel free to skip this part.

To build models for language-oriented tasks, it is crucial to generate numerical representations (or vectors) for words.

This allows words to be processed and manipulated mathematically, so that we can perform various computational operations on them.

The objective of embeddings is to capture semantic and syntactic relationships between words. This helps machines understand and reason about language more effectively.

In the pre-Transformers era, this was primarily done using pre-trained static embeddings.

Essentially, someone would train embeddings on, say, 100k, or 200k common words using deep learning techniques and open-source them.

Consequently, other researchers would utilize those embeddings in their projects.

The most popular models at that time (around 2013-2017) were:

- GloVe

- Word2Vec

- FastText, etc.

These embeddings genuinely showed some promising results in learning the relationships between words.

For instance, at that time, an experiment showed that the vector operation (King - Man) + Woman returned a vector near the word Queen.

That’s pretty interesting, isn’t it?

In fact, the following relationships were also found to be true:

- Paris - France + Italy ≈ Rome

- Summer - Hot + Cold ≈ Winter

- Actor - Man + Woman ≈ Actress

- and more.
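To see what such vector arithmetic looks like in practice, here is a minimal sketch. The 2-dimensional vectors below are made up purely for illustration (real static embeddings are learned and have hundreds of dimensions), and the `analogy` helper is a hypothetical name, not part of any library.

```python
import math

# Hypothetical 2-D embeddings: [royalty, maleness]. These values are invented
# for illustration; real embeddings are learned from large text corpora.
toy_embeddings = {
    "king": [0.9, 0.9],
    "queen": [0.9, 0.1],
    "man": [0.1, 0.9],
    "woman": [0.1, 0.1],
    "prince": [0.7, 0.9],
}

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def analogy(a, b, c, embeddings):
    """Return the word closest to (a - b + c), excluding the three input words."""
    target = [x - y + z for x, y, z in zip(embeddings[a], embeddings[b], embeddings[c])]
    candidates = [w for w in embeddings if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine_similarity(target, embeddings[w]))

result = analogy("king", "man", "woman", toy_embeddings)
print(result)  # queen
```

With these toy values, (King - Man) + Woman lands on roughly [0.9, 0.1], which is the vector we assigned to Queen.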

So, while these embeddings captured relative word representations, there was a major limitation.

Consider the following two sentences:

- Convert this data into a table in Excel.

- Put this bottle on the table.

Here, the word “table” conveys two entirely different meanings:

- The first sentence refers to a “data” specific sense of the word “table.”

- The second sentence refers to a “furniture” specific sense of the word “table.”

Yet, static embedding models assigned them the same representation.

Thus, these embeddings didn’t consider that a word may have different usages in different contexts.

But this was addressed in the Transformer era, which resulted in contextualized embedding models powered by Transformers, such as:

- BERT: A language model trained using two techniques:

  - Masked Language Modeling (MLM): Predict a missing word in the sentence, given the surrounding words.

  - Next Sentence Prediction (NSP).

  - We shall discuss it in a bit more detail shortly.

- DistilBERT: A simple, effective, and lighter version of BERT, which is around 40% smaller:

  - Utilizes a common machine learning strategy called the student-teacher approach (knowledge distillation).

  - Here, the student is the distilled version of BERT, and the teacher is the original BERT model.

  - The student model is supposed to replicate the teacher model’s behavior.

  - If you want to learn how this is implemented practically, we discussed it here:

- SentenceTransformer: If you read the most recent deep dive on building classification models on ordinal data, we discussed this model there.

  - Essentially, the SentenceTransformer model takes an entire sentence and generates an embedding for that sentence.

  - This differs from the BERT and DistilBERT models, which produce an embedding for each word in the sentence.

There are more models, but we won't go into more detail here, and I hope you get the point.

The idea is that these models are quite capable of generating context-aware representations, thanks to their self-attention mechanism and appropriate training mechanism.

BERT

For instance, if we consider BERT again, we discussed above that it uses the masked language modeling (MLM) technique and next sentence prediction (NSP).

Together, these two tasks make up the pre-training step of BERT because they involve training the model on a large corpus of text data before fine-tuning it on specific downstream tasks.

Moving on, let’s see how the pre-training objectives of masked language modeling (MLM) and next sentence prediction (NSP) help BERT generate embeddings.

#1) Masked Language Modeling (MLM)

- In MLM, BERT is trained to predict missing words in a sentence. To do this, a certain percentage of words in most (not all) sentences are randomly replaced with a special token, [MASK].

- BERT then processes the masked sentence bidirectionally, meaning it considers both the left and right context of each masked word. This is where the name “Bidirectional Encoder Representations from Transformers (BERT)” comes from.

- For each masked word, BERT predicts what the original word is supposed to be from its context. It does this by assigning a probability distribution over the entire vocabulary and selecting the word with the highest probability as the predicted word.

- During training, BERT is optimized to minimize the difference between the predicted words and the actual masked words, using techniques like cross-entropy loss.
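The masking and loss steps above can be sketched in a few lines. This is only a toy illustration, not how BERT is actually implemented: the `mask_tokens` and `cross_entropy` helpers are hypothetical names, the masking probability is an illustrative default, and the "predicted" probability distribution is written by hand rather than produced by a model.

```python
import math
import random

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Randomly replace tokens with [MASK]; return the masked tokens and
    a dict mapping each masked position to its original token."""
    rng = random.Random(seed)
    masked, labels = [], {}
    for i, token in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append("[MASK]")
            labels[i] = token
        else:
            masked.append(token)
    return masked, labels

def cross_entropy(predicted_probs, true_word):
    """Loss for one masked position: negative log-probability of the true word."""
    return -math.log(predicted_probs[true_word])

tokens = "the cat sat on the mat".split()
masked, labels = mask_tokens(tokens, mask_prob=0.3)
print(masked, labels)

# Hand-written stand-in for the model's distribution over a tiny vocabulary.
predicted_probs = {"mat": 0.5, "sofa": 0.3, "moon": 0.2}
prediction = max(predicted_probs, key=predicted_probs.get)  # highest probability
loss = cross_entropy(predicted_probs, "mat")
print(prediction, loss)
```

The loss shrinks toward zero as the model assigns more probability to the original word, which is exactly what training pushes it to do.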

#2) Next Sentence Prediction (NSP)

- In NSP, BERT is trained to determine whether two input sentences appear consecutively in a document or whether they are randomly paired sentences from different documents.

- During training, BERT receives pairs of sentences as input. Half of these pairs are consecutive sentences from the same document (positive examples), and the other half are randomly paired sentences from different documents (negative examples).

- BERT then learns to predict whether the second sentence follows the first sentence in the original document (label 1) or whether it is a randomly paired sentence (label 0).

- Similar to MLM, BERT is optimized to minimize the difference between the predicted labels and the actual labels, using techniques like binary cross-entropy loss.

Now, let's see how these pre-training objectives help BERT generate embeddings:

- MLM: By predicting masked words based on their context, BERT learns to capture the meaning and context of each word in a sentence. The embeddings generated by BERT reflect not just the individual meanings of words but also their relationships with surrounding words in the sentence.

- NSP: By determining whether sentences are consecutive or not, BERT learns to understand the relationship between different sentences in a document. This helps BERT generate embeddings that capture not just the meaning of individual sentences but also the broader context of a document or text passage.

With consistent training, the model learns how different words relate to each other in sentences. It learns which words often come together and how they fit into the overall meaning of a sentence.

This learning process helps BERT create embeddings for words and sentences, which are contextualized, unlike earlier embeddings like GloVe and Word2Vec:

Contextualized means that the embedding model can dynamically generate an embedding for a word based on the context it is used in.

As a result, if a word appears in a different context, the model returns a different representation.

This is precisely depicted in the image below for different uses of the word Bank.

For visualization purposes, the embeddings have been projected into 2d space using t-SNE.

As depicted above, the static embedding models, GloVe and Word2Vec, produce the same embedding for different usages of a word.

However, contextualized embedding models don’t.

In fact, contextualized embeddings understand the different meanings/senses of the word “Bank”:

- A financial institution

- Sloping land

- A long ridge, and more.

As a result, these contextualized embedding models address the major limitations of static embedding models.

The point of the above discussion is that modern embedding models are quite proficient at the encoding task.

As a result, they can easily transform documents, paragraphs, or sentences into numerical vectors that capture their semantic meaning and context.

Querying a vector database

Earlier, in the two examples, we provided an input query, which was encoded, and then we searched the vector database for vectors that were similar to the query vector.

In other words, the objective was to return the nearest neighbors as measured by a similarity metric, which could be:

- Euclidean distance (the lower the metric, the more the similarity).

- Manhattan distance (the lower the metric, the more the similarity).

- Cosine similarity (the higher the metric, the more the similarity).
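For reference, the three metrics can be written out directly. This is a plain-Python sketch for small vectors; the function names are our own, and in practice you would rely on an optimized numerical library.

```python
import math

def euclidean_distance(a, b):
    """Straight-line distance: lower means more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan_distance(a, b):
    """Sum of absolute coordinate differences: lower means more similar."""
    return sum(abs(x - y) for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Cosine of the angle between the vectors: higher means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

print(euclidean_distance([0, 0], [3, 4]))  # 5.0
print(manhattan_distance([0, 0], [3, 4]))  # 7
print(cosine_similarity([1, 0], [0, 1]))   # 0.0
```

Note that cosine similarity ignores vector length and only compares direction, whereas the two distance metrics are sensitive to magnitude.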

The idea resonates with what we do in a typical k-nearest neighbors (kNN) setting.

We can match the query vector across the already encoded vectors and return the most similar ones.

The problem with this approach is that to find, say, only the first nearest neighbor, the input query must be matched across all vectors stored in the vector database.

This is computationally expensive, especially when dealing with large datasets, which may have millions of data points. As the size of the vector database grows, the time required to perform a nearest neighbor search increases proportionally.

But in scenarios where real-time or near-real-time responses are necessary, this brute-force approach becomes impractical.
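A brute-force search of this kind is easy to write, which also makes its cost easy to see: every query touches every stored vector. The sketch below assumes Euclidean distance and a small in-memory list of vectors; `brute_force_search` is a hypothetical helper name.

```python
import math

def brute_force_search(query, vectors, top_k=1):
    """Compare the query with every stored vector and return the indices
    of the top_k closest ones (Euclidean distance)."""
    scored = [(math.dist(query, v), i) for i, v in enumerate(vectors)]
    scored.sort()  # sorts by distance, then by index
    return [i for _, i in scored[:top_k]]

vectors = [[0, 0], [1, 1], [5, 5]]
print(brute_force_search([0.9, 0.9], vectors))           # [1]
print(brute_force_search([0.9, 0.9], vectors, top_k=2))  # [1, 0]
```

The list comprehension runs once per stored vector, so doubling the database doubles the work for every single query.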

In fact, this problem is also observed in typical relational databases. If we were to fetch rows that match particular criteria, the whole table must be scanned unless an index is available.

Indexing the database provides a quick lookup mechanism, especially in cases where near real-time latency is paramount.

More specifically, when columns used in WHERE clauses or JOIN conditions are indexed, it can significantly speed up query performance.

A similar idea of indexing is also utilized in vector databases, which results in something we call approximate nearest neighbor (ANN) search, which is quite self-explanatory, isn't it?

Well, the core idea is to have a tradeoff between accuracy and run time. Thus, approximate nearest neighbor algorithms are used to find close neighbors of a data point, though these may not always be the true closest neighbors.

That is why they are also called non-exhaustive search algorithms.

The motivation is that when we use vector search, exact matches aren't absolutely necessary in most cases.

Approximate nearest neighbor (ANN) algorithms utilize this observation and compromise a bit of accuracy for run-time efficiency.

Thus, instead of exhaustively searching through all vectors in the database to find the closest matches, ANN algorithms provide fast, sub-linear time complexity solutions that yield approximate nearest neighbors.

Let’s discuss these techniques in the next section!

Approximate nearest neighbors (ANN)

While approximate nearest neighbor algorithms may sacrifice a certain degree of precision compared to exact nearest neighbor methods, they offer significant performance gains, particularly in scenarios where real-time or near-real-time responses are required.

The core idea is to narrow down the search space for the query vector, thereby improving the run-time performance.

The search space is reduced with the help of indexing. There are five popular indexing strategies here.

Let’s go through each of them.

#1) Flat Index

A flat index is another name for the brute-force search we saw earlier, which is also what kNN does. All vectors are stored in a single index structure without any hierarchical organization.

That is why this indexing technique is called “flat” – it involves no indexing strategy, and stores the data vectors as they are, i.e., in a ‘flat’ data structure.

As it searches the entire vector database, it delivers the best accuracy of all the indexing methods we shall see ahead. However, it is also the slowest, and it becomes impractical as the database grows.

Nonetheless, when the data conditions are favorable, such as having only a few data points to search over and a low-dimensional dataset, I wouldn’t recommend adopting a more sophisticated approach over a flat index.

But, of course, not all datasets are small, and using a flat index is impractical in most real-life situations.

Thus, we need more sophisticated approaches to index our vectors in the vector database.

#2) Inverted File Index

IVF is probably one of the simplest and most intuitive indexing techniques. While it is commonly used in text retrieval systems, it can be adapted to vector databases for approximate nearest neighbor searches.

Here’s how!

Given a set of vectors in a high-dimensional space, the idea is to organize them into different partitions, typically using clustering algorithms like k-means.

As a result, each partition has a corresponding centroid, and every vector gets associated with only one partition corresponding to its nearest centroid.

Thus, every centroid maintains information about all the vectors that belong to its partition.

When searching for the nearest vector to the query vector, instead of searching across all vectors, we first find the closest centroid to the query vector.

The nearest neighbor is then searched in only those vectors that belong to the partition of the closest centroid found above.
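To make the two phases (partitioning, then searching a single partition) concrete, here is a minimal sketch in plain Python. The function names, the toy data, and the fixed number of k-means iterations are all assumptions made for illustration; real vector databases implement this far more efficiently.

```python
import math
import random

def nearest(point, centroids):
    """Index of the centroid closest to `point` (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda c: math.dist(point, centroids[c]))

def kmeans(vectors, k, iterations=10, seed=0):
    """Plain k-means; a fixed iteration count stands in for a convergence check."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in vectors:
            clusters[nearest(v, centroids)].append(v)
        for c, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids

def build_ivf(vectors, k):
    """Assign every vector (by index) to the partition of its nearest centroid."""
    centroids = kmeans(vectors, k)
    partitions = [[] for _ in range(k)]
    for idx, v in enumerate(vectors):
        partitions[nearest(v, centroids)].append(idx)
    return centroids, partitions

def ivf_search(query, vectors, centroids, partitions):
    """Search only the partition whose centroid is closest to the query."""
    non_empty = [c for c in range(len(centroids)) if partitions[c]]
    best = min(non_empty, key=lambda c: math.dist(query, centroids[c]))
    return min(partitions[best], key=lambda i: math.dist(query, vectors[i]))

# Toy data: two well-separated groups of 2-D vectors.
vectors = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
centroids, partitions = build_ivf(vectors, k=2)
match = ivf_search([9.5, 10.2], vectors, centroids, partitions)
print(match, vectors[match])
```

Notice that `ivf_search` never looks at the vectors in the other partition, which is where the speed-up comes from.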

Let’s estimate the search run-time difference it provides over using a flat index.

To reiterate, in flat-index, we compute the similarity of the query vector with all the vectors in the vector database.

If we have $N$ vectors and each vector is $D$ dimensional, the run-time complexity is $O(ND)$ to find the nearest vector.

Compare it to the Inverted File Index, wherein, we first compute the similarity of the query vector with all the centroids obtained using the clustering algorithm.

Let’s say there are $k$ centroids, a total of $N$ vectors, and each vector is $D$ dimensional.

Also, for simplicity, let’s say that the vectors are equally distributed across all partitions. Thus, each partition will have $\frac{N}{k}$ data points.

First, we compute the similarity of the query vector with all the centroids, whose run-time complexity is $O(kD)$.

Next, we compute the similarity of the query vector to the data points that belong to the closest centroid’s partition, with a run-time complexity of $O(\frac{ND}{k})$.

Thus, the overall run-time complexity comes out to be $O(kD+\frac{ND}{k})$.

To get some perspective, let’s assume we have 10M vectors in the vector database and divide them into $k=100$ partitions. Thus, each partition is expected to have roughly 100,000 (1 lakh) data points.

In the flat index, we shall compare the input query across all data points – 10M.

In IVF, first, we shall compare the input query across all centroids (100), and then compare it to the vectors in the obtained partition (100,000). Thus, the total comparisons, in this case, will be 100,100, nearly 100 times fewer than with the flat index.
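The arithmetic is simple enough to check in code. The sketch below uses $N$ = 10M vectors and $k=100$ centroids from the example and assumes, as above, that vectors are spread evenly across partitions; the helper names are our own.

```python
def flat_comparisons(n_vectors):
    """A flat index compares the query with every stored vector."""
    return n_vectors

def ivf_comparisons(n_vectors, n_centroids):
    """IVF compares the query with every centroid, then with one partition
    (assumed to hold an equal share of the vectors)."""
    return n_centroids + n_vectors // n_centroids

n_vectors = 10_000_000
n_centroids = 100

flat = flat_comparisons(n_vectors)
ivf = ivf_comparisons(n_vectors, n_centroids)
speedup = flat / ivf
print(flat, ivf, round(speedup, 1))  # 10000000 100100 99.9
```

Under the same equal-partition assumption, the expression $k+\frac{N}{k}$ is smallest when $k$ is close to $\sqrt{N}$, so the number of centroids is a parameter worth tuning.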

Of course, it is essential to note that if some vectors are actually close to the input vector but still happen to be in the neighboring partition, we will miss them.

But recalling our objective, we were never aiming for the best solution but an approximate best (that's why we call it “approximate nearest neighbors”), so this accuracy tradeoff is something we willingly accept for better run-time performance.

In fact, if you remember the model compression deep dive, we followed the same idea there as well:

#3) Product Quantization

The idea of quantization, in general, refers to compressing the data while preserving as much of the original information as possible.

Thus, Product Quantization (PQ) is a technique used for vector compression for memory-efficient nearest neighbor search.

Let’s understand how it works in detail.

Step 1) Create data segments

Say we have some vectors, and each vector is 256-dimensional. Assuming each dimension is represented by a number that takes 32 bits, the memory consumed by each vector would be 256 x 32 bits = 8192 bits.

In Product Quantization (PQ), we first split all vectors into sub-vectors. A demonstration is shown below, where we split the vector into M (a parameter) segments, say 8:

As a result, each segment will be 32-dimensional.
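As a quick sketch, the split is just slicing the vector into M equal, contiguous chunks. The helper name `split_into_segments` is hypothetical, and the example vector is a simple range of numbers so that the segment boundaries are easy to see.

```python
def split_into_segments(vector, num_segments):
    """Split a vector into `num_segments` contiguous sub-vectors of equal size."""
    if len(vector) % num_segments != 0:
        raise ValueError("vector length must be divisible by num_segments")
    size = len(vector) // num_segments
    return [vector[i * size:(i + 1) * size] for i in range(num_segments)]

vector = list(range(256))  # stand-in for a 256-dimensional embedding
segments = split_into_segments(vector, num_segments=8)
print(len(segments), len(segments[0]))  # 8 32
```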

Step 2) Run KMeans

Next, we run KMeans on each segment separately, which will generate k centroids for each segment.

Do note that each centroid will represent the centroid for the subspace (32 dimensions) but not the entire vector space (256 dimensions in this demo).

For instance, if k=100, this will generate 100*8 centroids in total.

Training complete.

Step 3) Encode vectors

Next, we move to the encoding step.

The idea is that for each segment of a vector in the entire database, we find the nearest centroid from the respective segment, which has k centroids that were obtained in the training step above.

For instance, consider the first segment of the 256-dimensional data we started with:

We compare these segment vectors to the corresponding k centroids and find the nearest centroid for all segment vectors:

After obtaining the nearest centroid for each vector segment, we replace the entire segment with a unique centroid ID, which can be thought of as indices (a number from 0 to k-1) of the centroids in that sub-space.

We do this for all individual segments of the vectors:

This provides us with a quantized (or compressed) representation of all vectors in the vector database, which is composed of centroid IDs, and they are also known as PQ codes.
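The training and encoding steps can be sketched together as follows. This is a toy version under several assumptions: small random vectors (8 dimensions, M=4 segments, k=4 centroids per segment) instead of the 256-dimensional example, a basic k-means with a fixed number of iterations, and helper names (`train_pq`, `encode_pq`) that we made up for illustration.

```python
import math
import random

def split_into_segments(vector, m):
    size = len(vector) // m
    return [vector[i * size:(i + 1) * size] for i in range(m)]

def nearest(point, centroids):
    """Index (centroid ID) of the centroid closest to `point`."""
    return min(range(len(centroids)), key=lambda c: math.dist(point, centroids[c]))

def kmeans(points, k, iterations=10, seed=0):
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        for c, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids

def train_pq(vectors, m, k):
    """Run k-means separately on each segment; codebooks[s] holds the
    k centroids learned for segment s."""
    segmented = [split_into_segments(v, m) for v in vectors]
    return [kmeans([segs[s] for segs in segmented], k, seed=s) for s in range(m)]

def encode_pq(vector, codebooks):
    """Replace each segment with the ID of its nearest centroid."""
    segments = split_into_segments(vector, len(codebooks))
    return [nearest(seg, cb) for seg, cb in zip(segments, codebooks)]

# Toy database: 50 random 8-dimensional vectors.
rng = random.Random(42)
vectors = [[rng.random() for _ in range(8)] for _ in range(50)]

codebooks = train_pq(vectors, m=4, k=4)
pq_codes = [encode_pq(v, codebooks) for v in vectors]
print(pq_codes[0])  # a list of 4 centroid IDs, each between 0 and 3
```

Each 8-dimensional vector is now represented by just 4 small integers, one per segment.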

To recall, what we did here is that we’ve encoded every vector in the vector database as a vector of centroid IDs, each of which is a number from 0 to k-1. As long as k is at most 256, each ID fits in 8 bits, so every segment now only consumes 8 bits of memory.

As there are 8 segments, total memory consumed is 8*8=64 bits, which is 128 times lower memory usage than what we had earlier – 8192 bits.
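Here is the same memory calculation written out. One assumption to flag: we set k=256 so that a centroid ID needs exactly 8 bits, whereas the earlier k=100 illustration would need only 7 bits per ID. The variable names are our own.

```python
dims = 256            # dimensions per original vector
bits_per_value = 32   # each dimension stored as a 32-bit number
num_segments = 8      # M
k = 256               # centroids per segment (assumed, so that an ID takes 8 bits)

original_bits = dims * bits_per_value       # 8192
bits_per_code = (k - 1).bit_length()        # bits needed to store an ID in 0..k-1
compressed_bits = num_segments * bits_per_code
ratio = original_bits // compressed_bits

print(original_bits, compressed_bits, ratio)  # 8192 64 128
```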

The memory saving scales immensely well when we are dealing with millions of vectors.

Of course, the encoded representation isn’t entirely accurate, but that is acceptable here because we only need approximate nearest neighbors, not perfect precision.

Step 4) Find an approximate nearest neighbor

Now, you might be wondering, how exactly do we search for the nearest neighbor based on the encoded representations?

More specifically, given a new query vector Q, we have to find a vector that is most similar (or closest) to Q in our database.

We begin by splitting the query vector Q into M segments, as we did earlier.