Traffic Bots: Boosting Website Traffic or Misleading Strategy?

Traffic bots are a frequently discussed topic in online marketing. These programs, often simply called "bots," are designed to send traffic to websites for a variety of purposes. Understanding how they work is important for anyone exploring online traffic generation and digital marketing.

First, it's important to understand what traffic bots actually are. Traffic bots are software applications that simulate human web interactions: they visit websites, click links, fill out forms, and more. Unlike genuine visitors, who browse according to their own interests, traffic bots act programmatically.

Traffic bots can serve legitimate purposes such as analytics, SEO testing, and website load testing, but there is also a darker side: bots can be used maliciously to inflate website traffic statistics or generate fraudulent ad impressions.

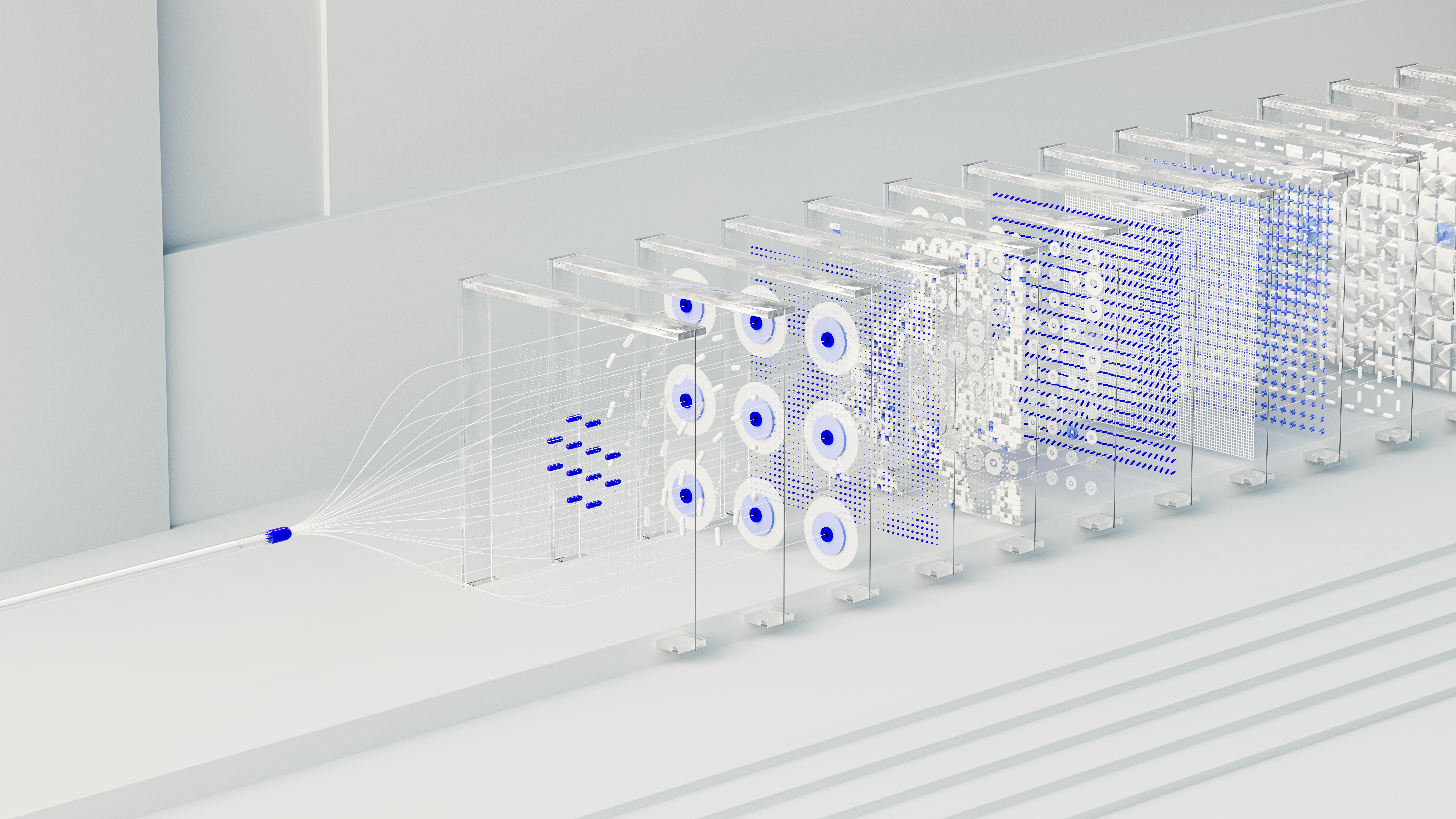

The mechanics of traffic bots vary, but they generally rely on automation and on parsing the pages they load. Some follow predetermined scripts that perform specific actions on each site they visit. Others are more advanced and use machine learning to adapt to the different situations they encounter.

To avoid detection, traffic bots often route their requests through proxies or virtual private networks (VPNs), which let the bot present different IP addresses to the websites it visits. By rotating through IP addresses, a single bot tries to pass itself off as many separate users whose actions look like legitimate human activity.

Traffic bots can also use headless browsers, which load and render web pages just as regular browsers do but without a visible window. This lets them mimic human behavior more convincingly by interacting with buttons, forms, cookies, and other page elements.

It's worth stressing that any use of traffic bots must be ethical. Using them for malicious purposes violates ethical norms, infringes on website owners' rights, and may carry legal consequences. Companies that provide web services often deploy security measures to identify and block bot visits, limiting the disruption they cause.

For website owners and digital marketers, understanding traffic bots is useful. Analyzing traffic patterns, distinguishing genuine users from bots, and implementing suitable security measures are vital to maintaining a healthy online presence.

Ultimately, while traffic bots can be a valuable tool for analytics and testing, it is crucial to approach their usage responsibly and ethically to ensure the integrity of web operations and user experiences.

The Implications of Using Traffic Bots on SEO and Web Rankings

Using traffic bots can have significant implications for SEO (search engine optimization) and web rankings. Before diving into the topic, it's essential to understand what traffic bots are. In simple terms, they are automated programs designed to simulate human-like website visits, interactions, and browsing behavior.

First, consider the benefits claimed for traffic bots. These tools promise to boost website traffic, visibility, and engagement artificially. By generating fake visits, clicks, and dwell time, they aim to manipulate analytics data so that a site appears more popular and active than it is. Some proponents also argue that traffic bots can attract real visitors if the extra activity leads to higher search engine rankings.

However, the use of traffic bots in SEO practices has several unfavorable implications.

1. Questionable Quality: Traffic generated by bots is not genuine user engagement. As search engines like Google increasingly prioritize user experience and intent, bot-generated visits are increasingly likely to be recognized as artificial. Consequently, any ranking gains built on these interactions are unlikely to translate into more organic traffic or better overall website performance.

2. Increased Bounce Rates: Traffic bots often load a single page and leave without engaging with content or carrying out meaningful actions, which drives up the bounce rate (the share of sessions that view only one page). Search engines may read this pattern as users quickly leaving the site after landing, a sign of poor-quality or irrelevant content. High bounce rates can therefore hurt rankings if they are taken to mean that the site does not satisfy user queries.

3. Negative User Experience: Genuine user satisfaction is critical to SEO because it affects website credibility, user retention, and brand loyalty. When bot-inflated analytics mislead site owners about which pages and content actually work, real users are more likely to encounter poor-quality pages and irrelevant content. This can lead to lower click-through rates, fewer conversions, and even reputational damage.

4. Risk of Penalties: Major search engines continually refine their algorithms to detect anomalies associated with bot-driven activity and to separate genuine user behavior from bot-generated patterns. Using bots to manipulate rankings violates search engine guidelines and risks penalties such as lowered search rankings or complete deindexing.

5. Misallocation of Resources: Investing time, effort, and money in traffic bots diverts resources from holistic SEO practices that produce sustainable, long-term growth. Effective SEO encompasses optimizing website structure, developing valuable content, earning credible backlinks, enhancing user experience, and maintaining appropriate technical standards; focusing on traffic bots neglects these essential elements.

6. Loss of Trust and Credibility: Authenticity, trustworthiness, and reputability play crucial roles in establishing relationships between websites and visitors. When a website violates these principles by artificially boosting figures through traffic bots, it risks losing trust and credibility with both users and search engines.
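The bounce-rate effect described in item 2 is easy to see with a little arithmetic. The minimal sketch below assumes that bounce rate is the share of sessions that view exactly one page. It represents each session as a list of page paths. That format and the session counts are hypothetical and are not taken from any analytics product.

```python
from typing import List


def bounce_rate(sessions: List[List[str]]) -> float:
    """Return the fraction of sessions that viewed exactly one page.

    Each session is a list of page paths in viewing order
    (a hypothetical format chosen for illustration).
    """
    if not sessions:
        return 0.0
    bounces = sum(1 for pages in sessions if len(pages) == 1)
    return bounces / len(sessions)


if __name__ == "__main__":
    # Ten human sessions, four of which are single-page visits.
    human = [["/"]] * 4 + [["/", "/blog", "/pricing"]] * 6
    # Twenty bot sessions that each load one page and leave.
    bots = [["/"]] * 20

    print(f"human only: {bounce_rate(human):.0%}")
    print(f"with bots:  {bounce_rate(human + bots):.0%}")
```

Running it prints 40% for the human sessions alone. Once the twenty single-page bot sessions are added, it prints 80%.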

In conclusion, traffic bots may promise short-term traffic boosts or higher rankings, but their long-term costs for SEO and web rankings are significant. Search engines continue to evolve, rewarding genuine user experiences and increasingly recognizing artificial or deceptive tactics. Sustainable SEO practices built on quality content, an engaging user experience, and ethical approaches remain essential for long-term online success.

Ethics in Traffic Generation: Where Do Traffic Bots Stand?

The world of online marketing and website promotion is constantly evolving, with new techniques and technologies emerging regularly. One approach that has gained popularity in recent years is the use of traffic bots: automated software programs designed to generate traffic to your website. While traffic bots may seem like a promising way to increase online visibility, it is essential to consider the ethics of using them.

First and foremost, it's important to understand that there is a clear distinction between legitimate, ethical traffic generation methods and unethical practices. Ethical traffic generation involves attracting real human visitors to your website through means like search engine optimization (SEO), content creation, social media marketing, and natural backlinks. These methods focus on providing real value to users and building genuine engagement.

Traffic bots, however, often drift into ethically questionable territory. They typically simulate a high volume of website visits, often routing requests through proxies so that the hits appear to come from many different IP addresses. While this can create an initial impression of increased visitors or popularity, the visits are artificial and involve no meaningful user engagement.

One significant ethical concern with using traffic bots revolves around deceptive practices. Generating artificial traffic misrepresents the true popularity and activity levels of your website. This becomes problematic because businesses rely on accurate data when making decisions regarding investments, partnerships, or advertising opportunities. Falsely inflating visitor numbers can mislead stakeholders and harm the credibility of website metrics.

Another ethical issue is the unfair advantage that traffic bots confer. Organic growth through carefully crafted content and genuine online engagement takes time and effort, but it builds trust with real users who value authenticity. Traffic bots, in contrast, skew competition by creating an illusory advantage over competitors who follow ethical practices. This undermines fair market dynamics and can lead to reputational damage if exposed.

Beyond the ethical concerns, traffic bots also carry legal risks. Many platforms and online services prohibit automated or artificial traffic in their terms of service. Violations can result in penalties, account suspensions, or even legal consequences for dishonest business practices. It is vital to weigh these potential consequences before adopting traffic bots as a traffic generation strategy.

In conclusion, while traffic bots may offer a tempting shortcut for boosting website traffic, they carry numerous ethical and legal concerns. Artificially inflating visitor numbers misrepresents the reality of website popularity and undermines fair competition. Using such tactics can lead to reputational damage, legal consequences, and loss of credibility in the eyes of your audience. In contrast, ethical traffic generation methods focus on authentic engagement and sustainable growth that not only builds trust but also drives long-term success.

Distinguishing Between Good and Bad Bots: Navigating the Online World

In the digital realm, bots have become an integral part of web interactions, both performing useful tasks and posing challenges for websites. It would be convenient if good bots and bad ones were easy to tell apart, but the reality is often more complex, and appreciating the nuances is essential to navigating the online world effectively. Here is some information to help distinguish between the two types of bots:

Good Bots:

1. Search Engine Bots: Search engines like Google employ automated bots (commonly known as crawlers or spiders) to index websites so that they can appear in search results. These bots generally obey the rules website owners publish in their robots.txt files.

2. Monitoring Bots: Websites may use monitoring bots to gather data on performance, accessibility, and security. These legitimate bots assess if a site is functioning optimally and report any issues.

3. Aggregator Bots: Some bots aggregate information from several sources for news or research purposes, providing users with comprehensive content in one location.
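Python's standard library includes `urllib.robotparser`, which a well-behaved crawler can use to check a site's robots.txt rules before fetching a page. The sketch below parses an example robots.txt held in a string instead of downloading one, so it runs offline. The rules, the bot name `ExampleBot`, and the URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content. A real crawler would instead call
# RobotFileParser.set_url("https://<site>/robots.txt") followed by read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules supplied as a string."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    agent = "ExampleBot"  # hypothetical crawler name
    for url in ("https://example.com/blog/post",
                "https://example.com/private/report"):
        allowed = rules.can_fetch(agent, url)
        print(f"{url}: {'fetch' if allowed else 'skip'}")
    # Seconds the site asks crawlers to wait between requests (None if unset).
    print("crawl delay:", rules.crawl_delay(agent))
```

A good bot would skip the `/private/` URL and pause between requests. Nothing technically forces a bad bot to do the same, which is why robots.txt is a convention rather than a security control.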

Bad Bots:

1. Scrapers and Content Thieves: Malicious actors develop bots that scrape content from other websites without permission, attempting to plagiarize or profit from it illegitimately.

2. Impersonators and Fake Accounts: Bots can be designed to impersonate real users, creating fake accounts on social media for spamming, phishing, or spreading misinformation.

3. Credential Stuffing: These nefarious bots exploit username and password combinations from previous data breaches by repeatedly trying them on different sites, aiming to gain unauthorized access.
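One common heuristic for spotting credential stuffing is to look for a single source that fails to log in to many different accounts in a short period. The sketch below makes several illustrative assumptions rather than recommending values:

- Failed-login events arrive sorted by time, as `(timestamp, ip, username)` tuples.
- The sliding window is 10 minutes.
- The threshold is more than five distinct usernames.

The IP addresses come from reserved documentation ranges.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, Set, Tuple

# (unix_timestamp_seconds, client_ip, attempted_username): hypothetical format
FailedLogin = Tuple[float, str, str]


def flag_credential_stuffing(
    events: Iterable[FailedLogin],
    window_seconds: float = 600.0,
    max_usernames: int = 5,
) -> Set[str]:
    """Return IPs that failed logins for more than `max_usernames` distinct
    accounts within a sliding window of `window_seconds`.

    Events are assumed to be sorted by timestamp.
    """
    recent: Dict[str, Deque[Tuple[float, str]]] = defaultdict(deque)
    flagged: Set[str] = set()
    for ts, ip, username in events:
        window = recent[ip]
        window.append((ts, username))
        # Drop attempts that have fallen out of the sliding window.
        while window and ts - window[0][0] > window_seconds:
            window.popleft()
        if len({name for _, name in window}) > max_usernames:
            flagged.add(ip)
    return flagged


if __name__ == "__main__":
    # One IP cycling through many accounts; one user mistyping a password.
    sample = [(float(i), "203.0.113.7", f"user{i}") for i in range(10)]
    sample += [(float(i), "198.51.100.2", "alice") for i in range(3)]
    sample.sort()
    print(flag_credential_stuffing(sample))
```

Counting distinct usernames rather than raw failures keeps an ordinary user who mistypes a password several times from being flagged.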

Differentiation Challenges:

1. Intent Ambiguity: Determining whether a bot's intent is malicious or benign can be difficult as the same techniques used by good bots can also be exploited by bad ones.

2. Evolving Strategies: Bad bot developers are continually adapting their methods to bypass security measures employed by websites and services, making it challenging to identify them consistently.

Detection Strategies:

1. Behavior Analysis: Observing browsing patterns, interaction frequency, and request origin can help identify suspicious behavior that distinguishes bad bots.

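2. User-Agent Analysis: Analyzing the user-agent string a bot sends, such as its browser and operating system details, can give insights into its authenticity. The client supplies this string and can set it to anything, so it is a weak signal on its own and works best combined with other checks.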

3. CAPTCHAs and Rate Limits: CAPTCHAs add a challenge that simple bots cannot pass, and rate limits cap how many requests a single client can make in a given period. Both slow down bad bots, although sophisticated bots and CAPTCHA-solving services can get past them.
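Rate limits are often implemented with a token bucket. Each client gets a bucket that refills at a steady rate, and each request spends one token, so short bursts are allowed but sustained high request rates are not. The minimal in-memory sketch below makes these assumptions:

- Buckets are keyed by client IP.
- The rate and capacity are placeholder values.
- It runs in a single process. A multi-server site would typically enforce limits in a shared layer such as a reverse proxy instead.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Bucket:
    tokens: float   # tokens currently available
    updated: float  # clock reading at the last refill


class RateLimiter:
    """Per-client token-bucket rate limiter (single process, in memory)."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate          # tokens added per second
        self.capacity = capacity  # maximum burst size
        self.clock = clock        # injectable for testing
        self.buckets: Dict[str, Bucket] = {}

    def allow(self, client_id: str) -> bool:
        """Spend one token for `client_id` if available; return whether allowed."""
        now = self.clock()
        bucket = self.buckets.get(client_id)
        if bucket is None:
            # New clients start with a full bucket.
            bucket = Bucket(tokens=self.capacity, updated=now)
            self.buckets[client_id] = bucket
        # Refill in proportion to elapsed time, capped at capacity.
        elapsed = now - bucket.updated
        bucket.tokens = min(self.capacity, bucket.tokens + elapsed * self.rate)
        bucket.updated = now
        if bucket.tokens >= 1:
            bucket.tokens -= 1
            return True
        return False


if __name__ == "__main__":
    limiter = RateLimiter(rate=2.0, capacity=5.0)  # placeholder limits
    # The first five requests use up the burst capacity; the rest are
    # refused until enough time passes for tokens to refill.
    print([limiter.allow("203.0.113.7") for _ in range(8)])
```

The clock is a constructor parameter so the refill logic can be tested deterministically without sleeping.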

The ability to distinguish between good and bad bots is an ongoing challenge. Websites often adopt a multi-tiered approach utilizing various tools and methods to filter out malicious bots while allowing well-intentioned ones to contribute positively. Continuous monitoring, adaptive security measures, and proactive response strategies are crucial in maintaining a healthy online ecosystem.

The Impact of AI and Machine Learning on the Evolution of Traffic Bots

Artificial intelligence (AI) and machine learning (ML) have transformed many industries, and traffic bots are no exception. Traffic bots, software programs designed to mimic human interaction online, have benefited greatly from these advances. Let us look at the ways AI and ML have shaped the growth and sophistication of traffic bots.

Firstly, AI has allowed traffic bots to become more intelligent and adaptive. Traditional traffic bots would follow predefined patterns, making them easily detectable and ineffective against evolving security measures. However, with the integration of AI algorithms, traffic bots can now learn from past experiences, adjust their behaviors accordingly, and mimic human-like characteristics. This enhanced intelligence greatly improves the stealthiness and effectiveness of these bots.

Furthermore, ML plays an essential role in optimizing the capabilities of traffic bots. Using ML techniques, traffic bots can analyze large amounts of data collected during their interactions, identify patterns, and extract useful insights. These insights help the bots model user behavior more closely, improve metrics such as click-through or conversion rates, and adjust their tactics for better results. ML models learn from data rather than from explicitly programmed rules, which makes traffic bots considerably more efficient.

AI and ML also help traffic bots evade detection. As websites deploy advanced security measures to tell humans from bots, AI techniques are used to design more sophisticated evasion strategies. By experimenting with different approaches and imitating user-like behavior, traffic bots become harder for detection systems to catch.

Additionally, AI-powered natural language processing (NLP) makes bots far more capable in conversations with humans. Instead of relying on rigid pre-scripted responses, NLP enables software to understand, interpret, and generate human language. As a result, these bots can engage users more naturally within chat systems or customer support interfaces.

However, it's important to acknowledge that the evolution of traffic bots fueled by AI and ML also raises concerns around ethics, security, and privacy. Malicious actors might exploit AI-enhanced bots for deceptive purposes, jeopardizing online transactions or spreading misinformation. Efficient bot detection mechanisms are essential to maintain a level playing field and a trustworthy online environment.

In conclusion, AI and ML have profoundly influenced the evolution of traffic bots. From greater intelligence and adaptability to optimization and evasion capabilities, these technologies have made traffic bots more efficient, effective, and context-aware. Ethical concerns remain, but ongoing advances in AI and ML will undoubtedly continue to shape the future of traffic bots across many domains.

Analyzing the Risks: Security Concerns Associated with Traffic Bots

The rise of automation and the use of bots in online activities have been both a blessing and a curse. Traffic bots in particular have become a widespread tool used by website owners, businesses, marketers, and cyber attackers alike. These automated systems can simulate visits to websites, attempt to manipulate SEO signals, inflate ad impressions, or even launch DDoS attacks. While traffic bots can offer benefits, it's crucial to carefully consider the security risks associated with their use.

One significant concern is that traffic bots can infringe on individuals' privacy. For instance, some bots enable website owners or marketers to record visitors' IP addresses, track their browsing patterns, and extract sensitive information like email addresses without proper consent or transparency. This raises concerns about privacy breaches, data misuse, targeted advertising without users' knowledge, and even identity theft.

Moreover, malicious actors often deploy traffic bots in more harmful ways. For example, attackers might use compromised devices or botnets (networks of infected computers) to generate bot traffic with malicious intent. Examples include spreading malware through drive-by downloads and executing DDoS attacks that overwhelm servers with fake traffic. On social media platforms, bots can also manipulate trends and influence public opinion through fake accounts or by amplifying false information.

The prevalence of traffic bots also harms businesses that depend on accurate metrics for decision-making. By distorting the web analytics that provide insights into user behavior and engagement, bots undermine the usefulness of that data. As a result, businesses may make misinformed decisions based on artificially inflated numbers rather than genuine user interaction.

Another concern relates to the comment sections of websites and forums. Traffic bots often impersonate users to post spam comments or irrelevant content solely intended to promote other websites or products. This not only clutters up legitimate discussion threads but also harms a website's credibility and user experience. These spam comments can contain malicious links or phishing attempts, which exposes users to security risks.

Furthermore, to the extent that search engines treat traffic or engagement as ranking signals, bots that artificially inflate website visits undermine the integrity of search results. Users may then be shown less relevant results, which harms their experience and can affect their decisions.

To mitigate the security concerns associated with traffic bots, it is crucial to implement appropriate security measures. CAPTCHA or other human verification methods can help minimize malicious bot activity. Regular website security audits can help identify and block illegitimate bot traffic, for example by checking for an excessive number of requests from suspicious IP addresses. Ensuring user data privacy is also of utmost importance, so organizations must be transparent about their data collection practices and comply with relevant privacy legislation.
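A basic version of that audit counts requests per client IP in the web server's access log and flags addresses above a threshold. The sketch below assumes logs in the Common Log Format, where the client address is the first field. The sample lines and the threshold are invented for illustration.

```python
import re
from collections import Counter
from typing import Dict, Iterable

# Common Log Format: host ident authuser [date] "request" status bytes
CLF_PATTERN = re.compile(r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} \S+')


def requests_per_ip(lines: Iterable[str]) -> Counter:
    """Count requests per client IP, skipping lines that don't match the format."""
    counts: Counter = Counter()
    for line in lines:
        match = CLF_PATTERN.match(line)
        if match:
            counts[match.group("ip")] += 1
    return counts


def heavy_hitters(lines: Iterable[str], threshold: int) -> Dict[str, int]:
    """Return {ip: request_count} for IPs with more than `threshold` requests."""
    return {ip: n for ip, n in requests_per_ip(lines).items() if n > threshold}


if __name__ == "__main__":
    sample_log = [
        '203.0.113.7 - - [10/Oct/2023:13:55:36 +0000] "GET /page HTTP/1.1" 200 512',
    ] * 30 + [
        '198.51.100.2 - - [10/Oct/2023:13:56:01 +0000] "GET / HTTP/1.1" 200 2326',
    ] * 3
    print(heavy_hitters(sample_log, threshold=20))
```

Many legitimate users can share one address behind a corporate or carrier NAT. IPs over the threshold are therefore better treated as candidates for review or rate limiting than as automatic blocks.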

Understanding the risks and taking proactive steps to combat them is essential when dealing with traffic bots. By doing so, website owners, businesses, and online platforms can maintain a safer, more secure environment while delivering an enhanced user experience to genuine visitors.

Real Traffic vs. Bot Traffic: Identifying the Differences and Their Importance

In today's digital world, website traffic plays a significant role in determining the success of an online business. That traffic falls into two main types: real traffic and bot traffic. Each has distinctive characteristics, and understanding the differences is crucial for website owners and marketers. Let's look at the contrasting attributes of each type and why identifying them is essential.

Real traffic refers to genuine visitors who reach a website through various means. These are human beings using web browsers to interact with the site, driven by their interests, needs, or curiosity. Real traffic can be further segmented into three kinds. Organic traffic comes from unpaid search engine results, referral traffic from links on external websites, and direct traffic from users typing the website URL into their browser. Real visitors leave traces, and their activity on a website provides invaluable insight into user behavior, preferences, purchasing patterns, and more.

Bot traffic, on the other hand, consists of visits from automated software applications, commonly known as bots. Bots are programmed to perform specific actions on websites. They can simulate user engagement such as clicking links, filling out forms, or making purchases, and can even imitate regular human browsing behavior. Depending on their purpose, bots can be benign or malicious. Bot traffic comes from various sources. These include search engine crawlers that index web pages, social media scrapers that collect data for analytics or post automated content, and malicious bots attempting fraud or hacking.
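Any bot can claim to be a search engine crawler simply by copying its user-agent string. Google, for example, therefore documents a DNS-based check:

1. Look up the hostname for the requesting IP (reverse DNS).
2. Confirm that the hostname belongs to the operator's domain.
3. Resolve that hostname back (forward DNS) and confirm it returns the same IP.

The sketch below implements that check with Python's standard `socket` module. The domain suffixes in the usage note are examples only, so check the crawler operator's current documentation. The lookup functions are parameters so the logic can be tested without network access.

```python
import socket
from typing import Callable, List, Sequence, Tuple

# Matches socket.gethostbyaddr / socket.gethostbyname_ex:
# both return (hostname, aliases, ip_addresses).
Lookup = Callable[[str], Tuple[str, List[str], List[str]]]


def is_verified_crawler(
    ip: str,
    allowed_suffixes: Sequence[str],
    reverse_lookup: Lookup = socket.gethostbyaddr,
    forward_lookup: Lookup = socket.gethostbyname_ex,
) -> bool:
    """Return True if `ip` reverse-resolves to a hostname under one of
    `allowed_suffixes` and that hostname forward-resolves back to `ip`.

    Note: socket.gethostbyname_ex is IPv4-only; IPv6 clients would need a
    forward lookup based on socket.getaddrinfo instead.
    """
    try:
        hostname, _, _ = reverse_lookup(ip)
    except OSError:  # socket.herror and socket.gaierror subclass OSError
        return False
    hostname = hostname.lower().rstrip(".")
    if not hostname.endswith(tuple(allowed_suffixes)):
        return False
    try:
        _, _, addresses = forward_lookup(hostname)
    except OSError:
        return False
    # The forward lookup must point back at the original address;
    # otherwise the reverse DNS record may have been set by an impostor.
    return ip in addresses
```

In practice the check would run only for requests whose user-agent claims to be a particular crawler, for example with suffixes such as `(".googlebot.com", ".google.com")`. Caching the result avoids adding DNS latency to every request.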

Distinguishing real traffic from bot traffic is important for several reasons.

1. Accurate Analytics: Understanding the actual number of real visitors allows for precise reporting and analysis. If bot traffic remains unidentified, it might skew important metrics like total visits, page views, conversion rates, bounce rates, and more.

2. Improved Marketing Strategies: Distinguishing between real and bot traffic gives marketers a clear view of campaign effectiveness and a better understanding of customer segments. When attributes such as region of origin, time spent on pages, and device usage are measured from real visitors only, campaigns can target potential customers more accurately.

3. Enhanced Security: Differentiating between bot traffic and real users is crucial to identify potential security breaches or fraudulent activities. Unwanted bots can lead to content scraping, ad fraud, brute force attacks, or even distributed denial of service (DDoS) attacks. By actively monitoring and filtering these malicious bots, website owners can protect themselves and their users from these threats.

4. Cost Optimization: Accurate identification of bot traffic allows website owners to allocate resources efficiently. For instance, they can optimize server load, bandwidth usage, or content delivery networks by differentiating between genuine visitors and the resource-consuming bots that serve no productive purpose.

5. Legal Compliance: Privacy frameworks such as the General Data Protection Regulation (GDPR) govern how organizations collect and process visitors' personal data, including obtaining consent where required. Separating authentic user interactions from bot activity helps keep consent records and data-processing reports accurate.
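As a rough illustration of how unfiltered bot hits skew reporting, the sketch below counts page views with and without requests whose user-agent contains common crawler keywords. The keyword list and the `(path, user_agent)` record format are assumptions for illustration. This kind of matching only catches bots that identify themselves, so dedicated bot-detection tools go further.

```python
from typing import Iterable, Tuple

# Substrings that self-identifying crawlers commonly include in their
# user-agent strings (illustrative, not exhaustive).
BOT_KEYWORDS = ("bot", "crawler", "spider", "slurp")

# (path, user_agent): hypothetical page-view record format
PageView = Tuple[str, str]


def looks_like_declared_bot(user_agent: str) -> bool:
    """True if the user-agent contains one of the crawler keywords."""
    ua = user_agent.lower()
    return any(keyword in ua for keyword in BOT_KEYWORDS)


def count_page_views(views: Iterable[PageView]) -> Tuple[int, int]:
    """Return (total_views, views_excluding_declared_bots)."""
    total = human = 0
    for _path, user_agent in views:
        total += 1
        if not looks_like_declared_bot(user_agent):
            human += 1
    return total, human


if __name__ == "__main__":
    sample = [
        ("/", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36"),
        ("/pricing", "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"),
        ("/blog", "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"),
        ("/blog", "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)"),
    ]
    total, human = count_page_views(sample)
    print(f"{total} page views recorded, {human} after excluding declared bots")
```

For this sample it prints "4 page views recorded, 2 after excluding declared bots". Half the recorded views would have been overstated.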

In conclusion, being able to differentiate between real traffic and bot traffic is essential for managing analytics effectively, improving marketing strategies, enhancing security measures, optimizing costs, and complying with legal requirements. By leveraging appropriate tools such as bot detection solutions and thorough analysis of web traffic patterns, website owners can make informed decisions that contribute to the growth and sustainability of their online presence.

Can Traffic Bots Deliver Real Value to Websites or Are They a Temporary Fix?

Traffic bots, or automated traffic generation tools, have gained popularity among website owners who want to boost their traffic quickly and easily. However, there is an ongoing debate about the effectiveness and long-term value that traffic bots can deliver. While they may be tempting as a temporary fix to increase visibility and traffic numbers, there are several factors to consider before investing in these tools.

First, it is essential to understand how traffic bots operate. These software programs or scripts simulate human web activity by generating automated visits, clicks, and interactions on websites, with the aim of creating an illusion of organic traffic and engagement. Unfortunately, the quality of this artificial traffic is questionable because it lacks genuine human interaction and interest.

Search engines like Google rely on ranking algorithms that prioritize user relevance and credibility. Websites that depend heavily on traffic bots risk violating search engine guidelines, which can bring penalties such as decreased visibility or even complete removal from search results. These consequences can severely damage a website's overall performance and online presence.

Furthermore, traffic bots generally fail to deliver real engagement. Human visitors provide meaningful interactions such as genuine comments, feedback, sharing content on social media, and referring others to the website. Bot-generated visits lack these interactions, making them unlikely to result in conversions or a loyal customer base. Relying solely on bot traffic therefore leads to skewed analytics and disappointing revenue.

Additionally, modern internet users are becoming increasingly savvy at detecting bot-generated activity. Bots typically exhibit predictable patterns that discerning individuals can identify as unnatural. When visitors suspect or discover false data behind a website's traffic numbers or activity, it can erode trust and damage the website's reputation among its target audience.

Bot-driven traffic may have some validity in a few short-term scenarios where an immediate boost is needed, but it should never be relied on as a sustainable strategy for long-term success. Websites aiming for genuine growth and engagement should focus on organic methods such as search engine optimization (SEO), content creation, social media marketing, and building an authentic community of users.

In summary, while traffic bots may offer a temporary solution to gain site visibility and numbers, the long-term benefits they provide are highly questionable. Real value is created through legitimate human traffic, genuine user engagement, and organic growth strategies. Thus, it is wise for website owners to steer clear of relying on traffic bots and instead invest time and effort into sustainable practices that can establish a valuable online presence over time.

Debating the Legality: Are Traffic Bots Breaking Internet Laws?

In the evolving landscape of the internet, the legality of traffic bots has become a contentious subject. These automated scripts or software programs are designed to generate website traffic and boost metrics such as page views or clicks, and their use often raises questions about ethical and legal boundaries. Opinions vary, so let's explore the key arguments commonly raised about whether traffic bots break internet laws.

One prominent argument holds that traffic bots can be illegal because they may violate laws governing computer systems and online activities. Many jurisdictions have legislation that prohibits unauthorized access to computer systems or networks. Deploying traffic bots could be seen as an attempt to exploit vulnerabilities in websites, software, or network infrastructure, which could expose the people running them to legal action under these statutes.

Additionally, many countries have laws against fraud and deceptive practices that make activities like click fraud (artificially inflating click numbers for financial gain) illegal. Traffic bots often engage in such practices to generate revenue through ad networks or affiliate programs, which also conflicts with industry regulations.

A further argument for illegality concerns breaches of platform terms of service or usage agreements. Platforms like Google and Facebook explicitly prohibit using automated tools to manipulate their services, and violations can lead to severe consequences, including account suspension, penalties, or even legal action.

However, opposing views hold that not every use of traffic bots should be categorized as illegal. In some cases, bot traffic is legitimate and within legal bounds. Organizations and website owners commonly use bots for data analytics, performance and load testing, or competitive intelligence gathering. In these scenarios, the intention is not malicious but aims to improve user experience or optimize web infrastructure.

Furthermore, some argue that clearer legislation is needed before bot practices can be judged unequivocally. Legal frameworks differ between jurisdictions, leading to diverging interpretations and uneven enforcement, so efforts should be made to establish more precise guidelines on traffic bots and their legality.

It is worth acknowledging that while traffic bots can harm ad campaigns, user experience, and data integrity, blanket statements classifying them as purely legal or purely illegal oversimplify the issue. Each case requires careful scrutiny of intent, usage context, applicable legislation, and platform-specific rules.

In conclusion, the question of whether traffic bots break internet laws is a complex one, with strong arguments on both sides. Their potential for malicious activities and breaching legal boundaries cannot be ignored. However, certain use cases do exist where traffic bots are legitimate and justified. Clarifications in legislation and platform regulations would be beneficial to set clearer boundaries and guide future practices in this constantly evolving realm.

Techniques to Protect Your Website from Malicious Traffic Bots

1. Implement CAPTCHA: Include a simple CAPTCHA mechanism on your website to verify that the user is human. This can help identify and block malicious bots that try to automate tasks or access your site's resources.

2. Deploy IP filtering: Monitor the incoming traffic and identify patterns of suspicious behavior. By employing IP filtering techniques, you can block traffic coming from suspicious or known bot-infected IP addresses.

3. Utilize User-Agent filtering: The User-Agent header identifies the software making a request. Rejecting empty, non-standard, or known-bot User-Agent strings stops unsophisticated bots at the server before they reach your application. Because the header is trivial to spoof, treat this as one layer among several rather than a complete defense.

4. Leverage JavaScript challenges: Require the client's browser to execute specific JavaScript operations before it can access restricted parts of your website. Simple bots built on plain HTTP clients do not execute JavaScript at all and fail these checks, although bots that use headless browsers can pass them.

5. Set rate limits: Malicious bots often generate an unusually high number of requests within a short period of time. By imposing rate limits on your server, you can mitigate potential damage caused by such bots and preserve resources for genuine visitors.

6. Implement blacklisting and whitelisting: Leverage blacklisting techniques to block requests from known bot IPs or suspicious domains. Conversely, use whitelisting as an added layer of security to only allow traffic originating from trusted sources.

7. Monitor server logs: Regularly analyze server logs and examine access patterns for any signs of suspicious activity. Look for recurring IP addresses, unusual request frequencies, or sudden spikes in web traffic as indicators of possible bot presence.

8. Use content delivery networks (CDNs): CDNs absorb excess traffic through distributed caching, and many also offer bot-filtering features that can detect and block malicious requests at the network edge, before they reach your own server.

9. Stay updated on security patches: Keep your website's operating system, CMS, plugins, or any other related software up to date. Security vulnerabilities in outdated versions can make your site an easy target for malicious bots.

10. Employ machine learning-based solutions: Advanced security solutions leveraging machine learning algorithms can help identify and mitigate bot traffic. By constantly analyzing traffic patterns, these solutions can adapt and improve over time, providing better protection against evolving malicious bot threats.
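
Several of the server-side checks above (IP filtering, User-Agent filtering, rate limits, and blacklisting/whitelisting) can be combined in a single request filter. The Python sketch below is a minimal, hypothetical illustration rather than a production implementation: the `RequestFilter` class, the example network ranges (taken from the IP blocks reserved for documentation), and the User-Agent substrings are all assumptions. The rate limiter keeps per-IP timestamps in memory, so it only works within a single server process.

```python
import time
from collections import defaultdict, deque
from ipaddress import ip_address, ip_network
from typing import Optional

# Hypothetical example lists. Real deployments would load these from a
# maintained threat feed or configuration rather than hard-coding them.
BLOCKED_NETWORKS = [ip_network("203.0.113.0/24")]   # "known bad" range (documentation block)
ALLOWED_NETWORKS = [ip_network("198.51.100.0/24")]  # trusted partners (documentation block)
SUSPICIOUS_UA_SUBSTRINGS = ("python-requests", "curl", "wget", "scrapy")


class RequestFilter:
    """Returns "allow" or a short reason for blocking a request."""

    def __init__(self, max_requests: int = 10, window_seconds: float = 1.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # Timestamps of recently allowed requests, kept per client IP.
        self.history = defaultdict(deque)

    def check(self, ip: str, user_agent: str, now: Optional[float] = None) -> str:
        now = time.monotonic() if now is None else now
        addr = ip_address(ip)

        # Whitelist: trusted sources skip all remaining checks.
        if any(addr in net for net in ALLOWED_NETWORKS):
            return "allow"
        # Blacklist / IP filtering: known-bad sources are rejected outright.
        if any(addr in net for net in BLOCKED_NETWORKS):
            return "blocked: ip"
        # User-Agent filtering: an empty or tool-like value is a weak signal,
        # since the header is trivial to spoof.
        ua = user_agent.lower()
        if not ua or any(s in ua for s in SUSPICIOUS_UA_SUBSTRINGS):
            return "blocked: user-agent"

        # Sliding-window rate limit: forget requests older than the window,
        # then refuse if the client has already used up its quota.
        window = self.history[ip]
        while window and now - window[0] >= self.window_seconds:
            window.popleft()
        if len(window) >= self.max_requests:
            return "blocked: rate limit"
        window.append(now)
        return "allow"
```

A web framework would call `check()` once per incoming request, answering with HTTP 429 for rate-limited clients and 403 for the other blocked cases. Order matters here: whitelisted sources bypass every other check, so that list should stay small and carefully maintained.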

Remember that website security is an ongoing effort. Regularly review security measures, stay informed about emerging bot threats, and adapt your defensive strategies accordingly to keep your website safeguarded from malicious traffic bots.
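
Item 4 in the list above, JavaScript challenges, is often built as a small proof-of-work puzzle. The server sends a random nonce, the page's JavaScript searches for a counter whose hash meets a difficulty target, and the server checks the answer with a single hash. The Python sketch below is a simplified, hypothetical version of that idea: `solve()` stands in for the script a real site would ship to the browser, and the 16-bit difficulty is an arbitrary assumption. Headless browsers can solve such puzzles too, so this mainly filters out clients that do not run JavaScript and raises the cost of sending requests in bulk.

```python
import hashlib
import secrets

DIFFICULTY_BITS = 16  # hypothetical; each extra bit doubles the expected client work


def leading_zero_bits(digest: bytes) -> int:
    """Count the leading zero bits of a hash digest."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        bits += 8 - byte.bit_length()
        break
    return bits


def issue_challenge() -> str:
    """Server side: a random nonce sent to the browser with the page."""
    return secrets.token_hex(16)


def solve(nonce: str, difficulty: int = DIFFICULTY_BITS) -> int:
    """Client side (in practice JavaScript in the page): brute-force a counter."""
    counter = 0
    while True:
        digest = hashlib.sha256(f"{nonce}:{counter}".encode()).digest()
        if leading_zero_bits(digest) >= difficulty:
            return counter
        counter += 1


def verify(nonce: str, counter: int, difficulty: int = DIFFICULTY_BITS) -> bool:
    """Server side: one hash confirms the client did the work."""
    digest = hashlib.sha256(f"{nonce}:{counter}".encode()).digest()
    return leading_zero_bits(digest) >= difficulty
```

The asymmetry is the point of the design: the client needs on the order of 2^16 hashes on average, while the server needs one. A real deployment would also record issued nonces, expire them, and reject reuse, which this sketch omits.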

Understanding CAPTCHA and Its Effectiveness Against Bots

Captchas are typically encountered on various websites and online platforms as a means to determine whether a user is a human or a traffic bot. CAPTCHA stands for "Completely Automated Public Turing test to tell Computers and Humans Apart." The primary purpose of a captcha is to prevent automated bots from performing unwanted or malicious actions such as spamming, data scraping, or gaining unauthorized access.

Unlike humans, bots struggle to decipher captchas accurately because of their complex, distorted presentation. Captchas often display distorted text, which humans can usually read correctly but bots find difficult to interpret.

One of the most common types is the text-based captcha, in which users type the characters they see on screen into a form field; some variants also include easily recognizable objects or symbols. The idea behind this approach is that bot algorithms have a hard time recognizing characters that are warped, rotated, or blurred.
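
To make the text-based flow concrete, here is a minimal, hypothetical server-side sketch in Python. The `CaptchaStore` class, the two-minute expiry, and the six-character alphabet are assumptions for illustration, and rendering the answer as a distorted image is left out. It shows two details that matter whatever the captcha type: each challenge can be answered only once, and it expires.

```python
import hashlib
import hmac
import secrets
import string
import time
from typing import Optional

ALPHABET = string.ascii_uppercase + string.digits


class CaptchaStore:
    """Hypothetical in-memory store of pending text-captcha answers."""

    def __init__(self, ttl_seconds: float = 120.0):
        self.ttl_seconds = ttl_seconds
        self._pending = {}  # challenge_id -> (sha256 of answer, expiry time)

    def issue(self, length: int = 6, now: Optional[float] = None) -> tuple:
        """Return (challenge_id, answer). The answer would be rendered as a
        distorted image; only challenge_id goes into the HTML form."""
        now = time.time() if now is None else now
        answer = "".join(secrets.choice(ALPHABET) for _ in range(length))
        challenge_id = secrets.token_urlsafe(16)
        digest = hashlib.sha256(answer.encode()).digest()
        self._pending[challenge_id] = (digest, now + self.ttl_seconds)
        return challenge_id, answer

    def verify(self, challenge_id: str, submitted: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        # pop() makes every challenge single-use, so a bot cannot retry guesses.
        entry = self._pending.pop(challenge_id, None)
        if entry is None:
            return False
        digest, expires_at = entry
        if now > expires_at:
            return False
        # Hashing yields fixed-length bytes whatever the user typed, which
        # compare_digest can then compare in constant time.
        guess = hashlib.sha256(submitted.strip().upper().encode()).digest()
        return hmac.compare_digest(guess, digest)
```

Matching is case-insensitive here because the alphabet is uppercase only; a site could equally require an exact match.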

Another type of captcha known as "Image Recognition Captcha" relies on presenting the user with images and requesting them to identify certain objects within those images. Bots face difficulties in recognizing and processing visual content with high accuracy compared to humans.

There is also a newer form, the behavior-based captcha, which asks users to perform simple tasks that humans complete easily but automated bots find difficult. Examples include dragging a slider into a specific position, or ticking a checkbox while the way the interaction is performed (such as cursor movement and timing) is evaluated alongside traditional captcha patterns.

Despite their effectiveness against most bots, captchas are not without flaws. Advanced OCR (Optical Character Recognition) algorithms developed by bot creators can bypass text-based captchas, because modern OCR can read characters even through heavy image distortion.

Captchas can also be designed so that they are too difficult for some humans to solve, creating accessibility barriers for visually impaired users and for people who struggle with visual perception. This highlights the importance of designing captchas with inclusivity in mind.

In conclusion, captchas play a vital role in verifying that users are human and in protecting websites and online platforms from automated bots. Their effectiveness depends largely on the complexity and variation that make them hard for bots to solve. While captchas are generally effective, combining traditional text-based approaches with newer behavior-based methods can better serve both security requirements and user accessibility.

How Do Social Media Platforms Deal with Bot Traffic?

Social media platforms have been grappling with the issue of bot traffic for quite some time now. Bots, automated software programs, are designed to perform various actions on these platforms, often without human intervention. While some bots serve legitimate purposes, like content curation or customer service, many others engage in malicious activities, such as spamming, amplifying fake engagement, spreading misinformation, or even carrying out social engineering attacks.

To tackle this challenge, social media platforms employ a variety of measures to detect and deal with bot traffic. These measures typically involve a combination of manual and automated techniques aimed at identifying and filtering out malicious bot accounts. Here are some common strategies adopted by social media platforms:

1. Account Verification: Many social media platforms have implemented account verification systems to ensure that users are real individuals and not bots. This often involves a user verifying their identity in some way, such as providing phone numbers or email addresses.

2. User Behavior Analysis: Social media platforms closely analyze user behavior and employ algorithms that can identify suspicious activities indicative of bots. They monitor metrics such as the number of posts, likes, shares, and follows, and how quickly these actions are performed. Unusually high activity levels or repetitive behavior may trigger further scrutiny.

3. Pattern Recognition: With advanced machine learning algorithms, platforms can detect repetitive patterns that may indicate bot activity. These patterns encompass characteristics like posting at consistent intervals with similar content, following or unfollowing large quantities of accounts simultaneously, or sending automated direct messages to multiple users.

4. IP Address Monitoring: Social media platforms track the IP addresses from which users log in. They compare these addresses across accounts to find cases where multiple accounts originate from the same IP address; when those accounts also display similar behavior, this suggests potential bot activity.

5. Captchas: Platforms often use CAPTCHA ("Completely Automated Public Turing test to tell Computers and Humans Apart") checks to verify that a user is human. Captchas commonly involve visual or audio challenges that computers typically struggle with, helping to filter out bot traffic.

6. Machine Learning Models: Many social media platforms utilize machine learning models to continuously refine their ability to detect and combat bot traffic. These models learn from user activity patterns, engagement metrics, historical data, and feedback from users to identify and disrupt bot accounts.

7. User Reporting: Social media platforms rely on their large user bases to report suspicious accounts or activities. Users can effectively flag accounts that display unusual behavior, such as sending spam messages or constantly posting suspicious content. In such cases, platforms investigate the reported accounts manually to determine if they are indeed bots.

8. Bot Countermeasures: Some platforms deploy specific defenses such as rate limiting, API access restrictions, and bulk-action prevention mechanisms, or introduce stringent policies against third-party applications that automate certain activities. By complicating bot development or restricting access, these countermeasures aim to discourage or hinder bot creators.

9. Collaborative Efforts: Larger social media platforms provide avenues for collaboration with security researchers, enabling them to contribute findings regarding bot behavior, new attack techniques, and vulnerabilities. This cooperation helps enhance the platforms' ability to tackle diverse bot threats effectively.
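
Two of the signals above, posting at suspiciously regular intervals (pattern recognition) and many accounts sharing one IP address (IP address monitoring), are simple to compute. The Python sketch below is a hypothetical illustration with made-up thresholds, not any platform's actual method. Regularity is measured as the coefficient of variation of the gaps between posts (standard deviation divided by mean): a scheduler posting every ten minutes scores close to 0, while human posting usually scores much higher. A shared IP is only a weak signal on its own, since many people can sit behind one mobile carrier or office network, which is why platforms pair it with similar behavior.

```python
from collections import defaultdict
from statistics import mean, pstdev
from typing import Dict, List, Set, Tuple


def interval_regularity(post_times: List[float]) -> float:
    """Coefficient of variation of the gaps between posts (0 = perfectly regular)."""
    if len(post_times) < 3:
        raise ValueError("need at least three posts to judge regularity")
    times = sorted(post_times)
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    avg = mean(gaps)
    if avg == 0:
        return 0.0  # all posts at the same instant: maximally machine-like
    return pstdev(gaps) / avg


def flag_regular_poster(post_times: List[float], threshold: float = 0.1) -> bool:
    """Hypothetical rule: flag accounts whose posting rhythm is nearly constant."""
    return interval_regularity(post_times) < threshold


def accounts_sharing_ips(
    logins: List[Tuple[str, str]], min_accounts: int = 3
) -> Dict[str, Set[str]]:
    """Map each IP to the accounts seen logging in from it, keeping only IPs
    used by at least `min_accounts` distinct accounts."""
    by_ip: Dict[str, Set[str]] = defaultdict(set)
    for account, ip in logins:
        by_ip[ip].add(account)
    return {ip: accts for ip, accts in by_ip.items() if len(accts) >= min_accounts}
```

Accounts that appear in both results, a shared IP and a near-zero regularity score, would be natural candidates for the manual review described under User Reporting.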

While social media platforms make concerted efforts against bot traffic, it remains an ongoing battle because bot technologies keep evolving and the people who build and sell bots adapt quickly. However, the platforms' investment in artificial intelligence and proactive strategies demonstrates a commitment to addressing this complex issue within their ecosystems.

Case Studies: When Using Traffic Bots Backfired

Traffic bots, sometimes called clickbots and sometimes run across botnets, are automated programs designed to simulate human web traffic. People often use these bots hoping to increase website traffic, improve search engine optimization (SEO), and boost advertising revenue. However, there have been significant cases where using traffic bots had unintended and negative consequences. Here are some common ways these experiments have backfired:

1. Burdened Server Infrastructure: One common issue arises when traffic bot usage exceeds what the server infrastructure can handle. As these programs generate massive amounts of artificial traffic, servers become overwhelmed, leading to slow loading times, website crashes, and poor user experiences. Such incidents can harm a platform's reputation and contribute to a loss in genuine traffic.

2. Ad Fraud Detection: Online advertisers track website visit statistics to determine ad effectiveness and allocate their budgets accordingly. However, when traffic consists predominantly of fake visits generated by bots, it becomes virtually impossible for advertisers to measure ad performance accurately. Consequently, this devalues advertisement campaigns and undermines trust between advertisers and publishers.

3. Search Engine Penalties: Search engines like Google prohibit attempts to manipulate their results, including through machine-generated traffic. Bot-driven traffic that raises red flags can trigger penalties, resulting in diminished organic search visibility or even temporary removal from search results for violating webmaster guidelines.

4. Wasted Advertising Budgets: Some individuals use traffic bots to inflate visitor numbers on sites monetized through advertising networks, assuming that more traffic means higher earnings. Advertisers end up paying for impressions and clicks that no real person saw, wasting their budgets, and once ad networks detect the fraudulent traffic they typically withhold the publisher's earnings or close the account.

5. Damage to Credibility: Many online businesses rely on transparency and trust-building initiatives with audiences. Deploying traffic bots erodes this credibility, as it deceptively represents user interest and engagement, thus compromising the authenticity expected from the platform. Dubious data can harm a brand's image, result in lost customers, and impact long-term viability.

Taken together, these cases show the adverse effects traffic bots can have on websites, businesses, and users. Such blackhat strategies violate ethical guidelines and expose those who use them to significant reputational damage. They underline the importance of relying on legitimate strategies to achieve sustainable growth while safeguarding your website's integrity, ensuring genuine user interactions, and preserving trust within the digital ecosystem.

Future Perspectives: The Role of Bots in Digital Marketing Evolution

In the rapidly changing landscape of digital marketing, bots are playing an increasingly vital role. These automated software programs, known as traffic bots, have the power to simulate human-like behavior online, consequently influencing website traffic, engagement, and conversion rates. As we delve into the future perspective of bots in digital marketing evolution, it is crucial to understand their far-reaching implications and potential advantages.

First and foremost, bots offer unparalleled efficiency and scalability in driving website traffic. With their ability to automate tasks such as searching, browsing, and ad clicking, they can significantly boost the number of visitors to a website within a short span of time. Additionally, bots excel in reducing human errors and optimizing processes involved in digital marketing campaigns. This translates to better overall performance and more accurate data analysis.

Moreover, bots possess immense potential in enhancing customer engagement through personalized interactions. Natural language processing capabilities enable them to craft customized responses based on user inquiries, preferences, and behavior patterns. Consequently, brands can achieve higher customer satisfaction by addressing concerns promptly and providing tailored recommendations or solutions.

Another significant advantage of bots lies in their capacity to streamline lead generation and nurture relationships with potential customers. By automating lead qualification processes, these intelligent programs assist marketers in segregating high-quality leads from low-converting ones. Furthermore, bots can deliver targeted messages at specific stages of the customer journey, nurturing prospects through automated email responses or instant messaging assistance.

As AI technology continues to advance, bots are set to revolutionize digital marketing analytics further. They facilitate advanced data collection and analysis by monitoring user behavior, extracting relevant insights from patterns, and identifying trends that human marketers might overlook. This empowers marketers with actionable intelligence to optimize their campaigns in real-time and make informed decisions for better results.

However, it is essential to recognize the potential challenges that come with the increased use of traffic bots. One concerning aspect is the potential for misuse and unethical practices like exploiting vulnerabilities in online advertising systems or engaging in fraudulent activities to deceive users. To ensure bot-driven marketing strategies adhere to ethical standards, companies should prioritize transparency and comply with the evolving regulations surrounding their use.

In conclusion, bots are poised to play a pivotal role in shaping the future of digital marketing. From driving website traffic and enhancing customer engagement to streamlining lead generation and providing valuable data insights, their capabilities offer a wide range of advantages that can significantly impact businesses' success. With responsible implementation, bots can revolutionize marketing strategies, enabling brands to reach audiences more effectively and efficiently in our ever-evolving digital landscape.

Education for Webmasters: Recognizing Bot Patterns and Safeguarding Your Site

In the ever-changing landscape of the internet, webmasters need to stay ahead of the game by recognizing bot patterns and implementing the necessary safeguards to protect their sites. Bots, even those designed with benign intentions, can harm your website's performance and distort your valuable traffic data. Educating yourself about these bot patterns is essential to safeguarding your site. Here are some key points to consider:

Understanding bot patterns:

Bot patterns refer to distinct characteristics displayed by bots while interacting with websites. By recognizing these patterns, you can make informed decisions about whether to allow or restrict their access. Some bots are legitimate, like search engine crawlers that help index and rank your web pages appropriately. However, there are others driven by malicious intent that can cause harm by spamming forms, scraping content, or executing DDoS attacks.
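
Because any client can claim to be a search engine crawler in its User-Agent header, the legitimate crawlers mentioned above are best verified by DNS. Google documents a two-step check for its crawlers: a reverse DNS lookup on the requesting IP must return a hostname under one of Google's crawler domains, and a forward lookup of that hostname must resolve back to the same IP. The Python sketch below follows that procedure. The domain suffix list is an assumption based on Google's published guidance and should be checked against the current documentation; the lookup functions are injectable parameters, a design choice that lets the logic be tested without network access.

```python
import socket
from ipaddress import ip_address
from typing import Callable, Iterable, List

# Assumed suffixes based on Google's published guidance; verify against the
# current documentation before relying on them.
GOOGLE_CRAWLER_SUFFIXES = (".googlebot.com", ".google.com", ".googleusercontent.com")


def _reverse_dns(ip: str) -> str:
    # gethostbyaddr returns (hostname, aliases, addresses).
    return socket.gethostbyaddr(ip)[0]


def _forward_dns(hostname: str) -> List[str]:
    # getaddrinfo covers IPv4 and IPv6; info[4][0] is the address string.
    return [info[4][0] for info in socket.getaddrinfo(hostname, None)]


def is_verified_crawler(
    ip: str,
    allowed_suffixes: Iterable[str] = GOOGLE_CRAWLER_SUFFIXES,
    reverse_lookup: Callable[[str], str] = _reverse_dns,
    forward_lookup: Callable[[str], List[str]] = _forward_dns,
) -> bool:
    """Reverse-resolve the IP, check the domain, then confirm the hostname
    resolves back to the same IP (a forged PTR record alone is not enough)."""
    try:
        hostname = reverse_lookup(ip).rstrip(".").lower()
    except OSError:  # e.g. socket.herror: no PTR record, so not verifiable
        return False
    if not hostname.endswith(tuple(allowed_suffixes)):
        return False
    try:
        addresses = forward_lookup(hostname)
    except OSError:  # e.g. socket.gaierror: hostname does not resolve
        return False
    target = ip_address(ip)
    return any(ip_address(addr) == target for addr in addresses)
```

DNS lookups are slow compared with serving a page, so a site would typically cache the result per IP rather than verifying on every request.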

Differentiating human visitors from bots:

Distinguishing automated bots from genuine human visitors can be challenging. One way is to monitor the behavioral patterns of website interactions. For instance, if a visitor clicks through multiple pages within seconds or fills out forms unusually quickly and repeatedly, it is likely a bot. Analyzing traffic sources and geographic information can also help distinguish humans from bots.
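
The behavioral cues in the previous paragraph translate into simple heuristics. The Python sketch below is hypothetical: the `Session` structure and the thresholds (one second per page on average, three seconds per form, more than two submissions) are assumptions to be tuned against your own traffic, and each returned signal is a reason for closer scrutiny rather than proof of a bot.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical thresholds; tune them against your own traffic.
MIN_AVG_SECONDS_PER_PAGE = 1.0
MIN_FORM_FILL_SECONDS = 3.0
MAX_FORM_SUBMISSIONS = 2


@dataclass
class Session:
    page_view_times: List[float] = field(default_factory=list)    # seconds since session start
    form_fill_seconds: List[float] = field(default_factory=list)  # form shown -> submitted, per form


def bot_signals(session: Session) -> List[str]:
    """Return reasons a session looks automated (an empty list if none)."""
    signals = []

    times = sorted(session.page_view_times)
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    # Require several page views so a single quick click is not enough to flag.
    if len(gaps) >= 3 and sum(gaps) / len(gaps) < MIN_AVG_SECONDS_PER_PAGE:
        signals.append("pages viewed faster than a person can read them")

    if any(s < MIN_FORM_FILL_SECONDS for s in session.form_fill_seconds):
        signals.append("form completed faster than a person can type")

    if len(session.form_fill_seconds) > MAX_FORM_SUBMISSIONS:
        signals.append("repeated form submissions in one session")

    return signals
```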

Implementing safeguards:

To protect your site from malicious activity and ensure uninterrupted performance, various safeguards can be employed. One common technique is CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart). CAPTCHA effectively addresses spam attacks by presenting users with tests that only humans can easily fulfill, creating a roadblock for bots attempting to gain access.

Employing tools and services:

Several tools and services are available that aid in recognizing and mitigating bot traffic. Web analytics systems such as Google Analytics offer insights into visited pages, bounce rates, and other metrics that can indicate suspicious behavior. Additionally, utilizing reputable bot detection services, firewalls, and security plugins can provide an extra layer of protection against harmful bots.

Regular monitoring and analysis:

Webmasters should diligently monitor their site's traffic data, looking for irregularities that may point to bot activity. Frequent analysis allows for the prompt detection of unusual behavioral patterns, enabling quick action to safeguard your site from potential threats. Regularly reviewing visitor logs, referral sources, and user agent information can assist in identifying patterns that do not align with genuine human interactions.
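
As a starting point for reviewing visitor logs and user agent information, the Python sketch below scans access-log lines in the Apache/Nginx "combined" format and reports IP addresses with unusually many requests or no User-Agent at all. The regular expression is a simplification (it does not handle escaped quotes inside fields), and the default `min_requests` threshold of 100 is an arbitrary assumption that depends on how much time the log covers.

```python
import re
from collections import Counter
from typing import Dict, Iterable, List

# Simplified pattern for the "combined" log format:
# IP ident user [time] "request" status size "referrer" "user-agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def find_suspects(lines: Iterable[str], min_requests: int = 100) -> Dict[str, List[str]]:
    """Return IPs with many requests and IPs that sent no User-Agent."""
    counts = Counter()
    missing_agent = set()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            continue  # skip lines that are not in the expected format
        ip = match["ip"]
        counts[ip] += 1
        if match["agent"] in ("", "-"):
            missing_agent.add(ip)
    # most_common() lists the busiest IPs first.
    high_volume = [ip for ip, n in counts.most_common() if n >= min_requests]
    return {"high_volume": high_volume, "missing_user_agent": sorted(missing_agent)}
```

Because iterating over an open file yields its lines, the function can be handed a log file object directly; where your server writes its access log depends on your setup.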

In conclusion, education about bot patterns is crucial for webmasters to safeguard their sites effectively. By understanding different bot types, recognizing behavioral variations, and employing the necessary safeguards and tools, you can protect your website while enhancing the overall user experience. Stay vigilant, analyze traffic data regularly, and take appropriate measures to mitigate the risks associated with bot activity.